Uncertainty in machine learning: probability and noise

Image by author

Editor’s note: This article is part of a series on visualizing the basics of machine learning.

Welcome to the latest entry in our series on visualizing the basics of machine learning. This series aims to break down important and often complex technical concepts into intuitive, visual guides to help you master the core principles of the field. This entry focuses on uncertainty, probability, and noise in machine learning.

Machine learning uncertainty

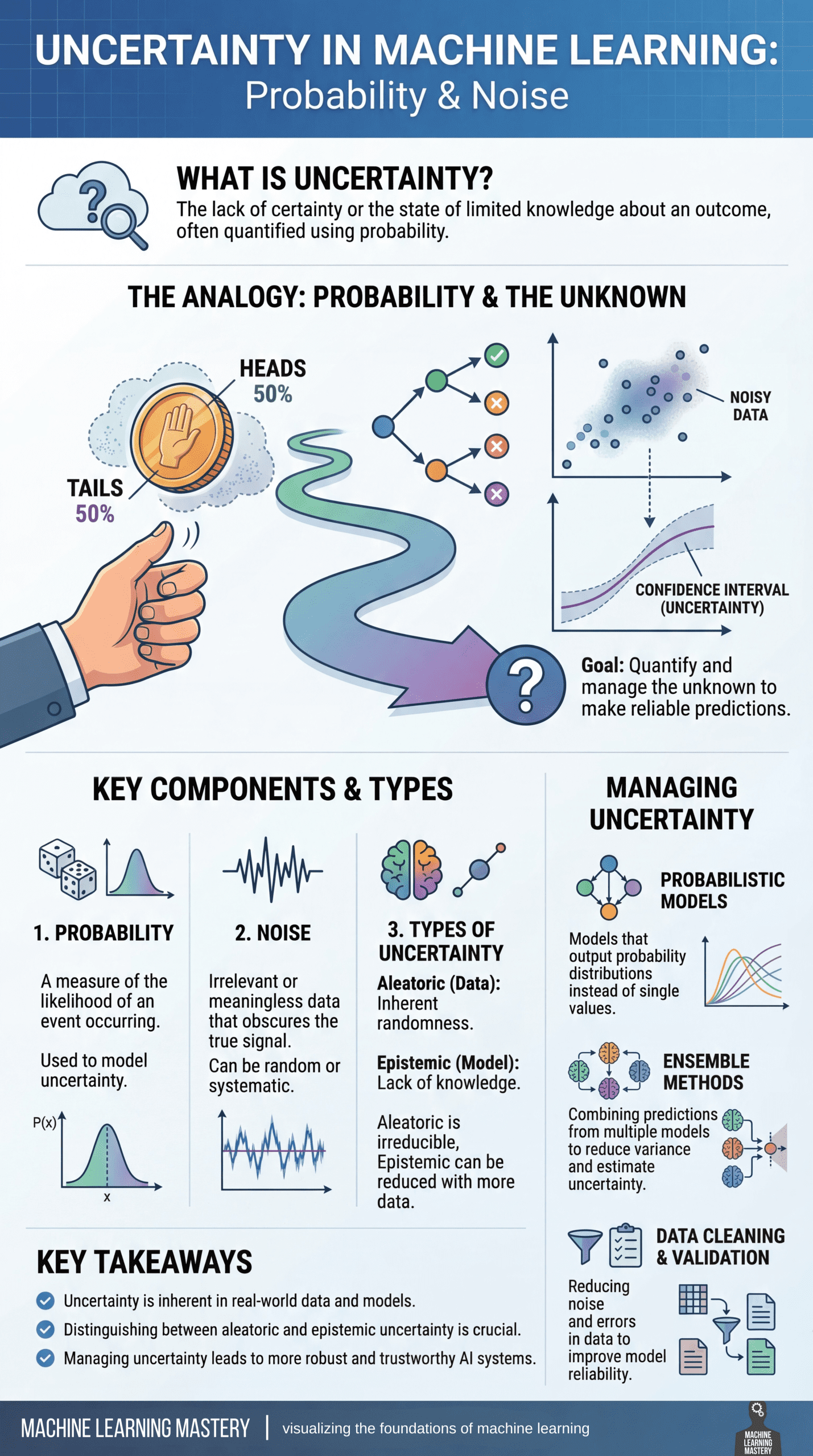

Uncertainty is an inevitable part of machine learning and arises whenever a model attempts to make predictions about the real world. At its core, uncertainty reflects a lack of complete information about outcomes, and it is most often quantified using probabilities. Uncertainty is not a flaw but something models must explicitly account for to produce reliable predictions.

A helpful way to think about uncertainty is through the lens of probability and the unknown. Much like tossing a fair coin, where the probabilities are well defined but the outcome is uncertain, machine learning models frequently operate in environments where multiple outcomes are possible. As data flows through a model, its predictions can branch toward different outcomes because of randomness, incomplete information, and variability in the data itself.
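To make the coin analogy concrete, here is a minimal Python sketch (an illustration, not part of the original series) that simulates a fair coin using only the standard library. The function name `simulate_fair_coin` and the fixed seed are hypothetical choices. Any single flip is unpredictable, yet the observed fraction of heads tends toward the known probability of 0.5 as the number of flips grows.

```python
import random


def simulate_fair_coin(n_flips: int, seed: int = 0) -> float:
    """Flip a fair coin n_flips times and return the observed fraction of heads."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    # Each comparison is True (heads) with probability 0.5; summing bools counts heads.
    heads = sum(rng.random() < 0.5 for _ in range(n_flips))
    return heads / n_flips


# The probability of heads is fixed at 0.5, but individual outcomes vary.
# Only the long-run frequency is predictable, and it approaches 0.5 as n grows.
for n in (10, 100, 10_000):
    print(f"{n:>6} flips -> fraction of heads = {simulate_fair_coin(n):.3f}")
```

The exact fractions depend on the seed; what matters is that small samples can stray far from 0.5 while large samples cluster tightly around it.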

The goal of dealing with uncertainty is not to eliminate it but to measure and manage it. Doing so requires understanding a few key components:

- Probability provides a mathematical framework for expressing how likely an event is to occur.

- Noise represents irrelevant variation in data that obscures the underlying signal; it can be either random or systematic.

Together, these factors determine the uncertainty present in a model's predictions.
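The difference between random and systematic noise can be sketched in a few lines of Python. This example assumes a hypothetical signal y = 2x + 1, models random noise as zero-mean Gaussian variation, and models systematic noise as a constant +0.5 offset (for instance, a miscalibrated sensor). All of these values and the helper names `true_signal` and `measure` are illustrative assumptions.

```python
import random
import statistics


def true_signal(x: float) -> float:
    """Hypothetical underlying relationship a model would try to learn."""
    return 2.0 * x + 1.0


def measure(x: float, rng: random.Random, noise_sd: float = 1.0, bias: float = 0.0) -> float:
    """One observation: true signal + zero-mean Gaussian noise + constant systematic bias."""
    return true_signal(x) + rng.gauss(0.0, noise_sd) + bias


rng = random.Random(42)
x = 3.0  # the true signal value here is 7.0
n = 5000

random_only = [measure(x, rng) for _ in range(n)]
with_bias = [measure(x, rng, bias=0.5) for _ in range(n)]

# Averaging repeated measurements cancels zero-mean random noise...
print(f"mean (random noise only): {statistics.fmean(random_only):.3f}")
# ...but a systematic offset survives any amount of averaging.
print(f"mean (random + 0.5 bias): {statistics.fmean(with_bias):.3f}")
```

The first mean lands close to the true value of 7.0, while the second stays near 7.5: collecting more data does not remove systematic error, so it has to be found and corrected in the data itself.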

Not all uncertainty is the same. Aleatoric uncertainty stems from the inherent randomness of the data and cannot be reduced by collecting more information. Epistemic uncertainty, on the other hand, arises from a lack of knowledge about the model or the data-generating process and can often be reduced by collecting more data or improving the model. Distinguishing between these two types is essential for interpreting model behavior and deciding how to improve performance.
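One simple way to see the difference is to estimate the mean of a noisy quantity. In the sketch below (a deliberate simplification, not a general recipe), the sample standard deviation of the observations stands in for aleatoric uncertainty, and the standard error of the estimated mean stands in for epistemic uncertainty. The true value of 10, the noise level of 2, and the function name `uncertainty_breakdown` are hypothetical.

```python
import math
import random
import statistics


def uncertainty_breakdown(n: int, noise_sd: float = 2.0, seed: int = 0) -> tuple[float, float]:
    """Draw n noisy observations of a fixed true value (10.0) and return
    (aleatoric spread of the data, epistemic uncertainty of the estimated mean)."""
    rng = random.Random(seed)
    data = [10.0 + rng.gauss(0.0, noise_sd) for _ in range(n)]
    aleatoric = statistics.stdev(data)    # spread of individual observations
    epistemic = aleatoric / math.sqrt(n)  # standard error of the sample mean
    return aleatoric, epistemic


for n in (10, 100, 10_000):
    a, e = uncertainty_breakdown(n)
    print(f"n={n:>6}: aleatoric ~ {a:.2f}, epistemic ~ {e:.3f}")
```

As n grows, the aleatoric estimate settles near the noise level of 2 instead of shrinking, while the epistemic term falls roughly as 1/sqrt(n). More data reduces what we do not know about the mean, but not the randomness of each individual observation.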

To manage uncertainty, machine learning practitioners rely on several strategies. Probabilistic models output a full probability distribution rather than a single point estimate, making uncertainty explicit. Ensemble methods combine predictions from multiple models to reduce variance and better estimate uncertainty. Data cleaning and validation further improve reliability by reducing noise and correcting errors before training.
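As an illustration of the ensemble idea, the sketch below trains a bootstrap ensemble (bagging) of ordinary least-squares lines and reports each prediction as a mean plus a spread across ensemble members. The synthetic data, the 50-member ensemble, and the helper names `fit_line`, `bootstrap_ensemble`, and `predict_with_uncertainty` are assumptions for illustration; in practice you would typically rely on an established library rather than hand-rolled fits.

```python
import random
import statistics


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def bootstrap_ensemble(xs, ys, n_models=50, seed=0):
    """Fit each ensemble member on a bootstrap resample (sampling with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_line([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def predict_with_uncertainty(models, x):
    """Return the ensemble's mean prediction and its spread (std) across members."""
    preds = [slope * x + intercept for slope, intercept in models]
    return statistics.fmean(preds), statistics.stdev(preds)


# Hypothetical training data: y = 2x + 1 plus Gaussian noise, with x in [0, 5].
rng = random.Random(1)
xs = [rng.uniform(0.0, 5.0) for _ in range(30)]
ys = [2.0 * x + 1.0 + rng.gauss(0.0, 1.0) for x in xs]
models = bootstrap_ensemble(xs, ys)

for x in (2.5, 20.0):  # inside vs. far outside the training range
    mean, spread = predict_with_uncertainty(models, x)
    print(f"x={x:>5}: prediction {mean:.2f} +/- {spread:.2f}")
```

The spread is small near the training data and grows sharply when extrapolating far outside it, which is the kind of epistemic uncertainty an ensemble makes visible. Note that this spread does not include the irreducible noise in y, so on its own it understates the total predictive uncertainty.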

Uncertainty is inherent in real-world data and machine learning systems. By recognizing its sources and incorporating it directly into modeling and decision-making, practitioners can build models that are not only more accurate but also more robust, transparent, and reliable.

The infographic below provides a concise summary of this information for easy reference. A high-resolution PDF of the infographic is also available.

Uncertainty, probability, and noise: Visualizing the fundamentals of machine learning

Image by author

Machine learning mastery resources

Below are some selected resources for learning more about probability and noise.

- A gentle introduction to uncertainty in machine learning – This article explains what uncertainty means in machine learning, explores its key sources, such as noise in observations, incomplete coverage of the domain, and imperfect models, and shows how probability provides the tools to quantify and manage that uncertainty.

  Key takeaway: Probability is essential to understanding and managing uncertainty in predictive modeling.

- Probability in Machine Learning (7-day mini course) – This structured crash course guides readers through the key probability concepts needed for machine learning, from basic types of probability and probability distributions to Naive Bayes and entropy, with hands-on lessons designed to build confidence in applying these concepts in Python.

  Key takeaway: Building a solid foundation in probability will improve your ability to apply and interpret machine learning models.

- Understand probability distributions in machine learning using Python – This tutorial introduces important probability distributions used in machine learning, shows how they apply to tasks such as modeling residuals and classification, and provides Python examples to help practitioners use them effectively.

  Key takeaway: Mastering probability distributions will help you model uncertainty and choose the right statistical tools throughout your machine learning workflow.

Stay tuned for additional entries in our series on Visualizing Machine Learning Fundamentals.