A robot searching for workers trapped in a partially collapsed mine shaft must quickly map the scene and determine its own location within it while navigating hazardous terrain.

Researchers have recently begun building powerful machine learning models to perform this complex task using only images from the robot’s onboard camera, but even the best models can only process a few images at a time. In real-life disaster situations, where every second counts, search and rescue robots must quickly traverse large areas and process thousands of images to complete their missions.

To overcome this problem, researchers at MIT have developed a new system that can process any number of images, drawing ideas from both recent artificial intelligence vision models and classical computer vision. Their system accurately generates 3D maps of complex scenes, such as crowded office hallways, in seconds.

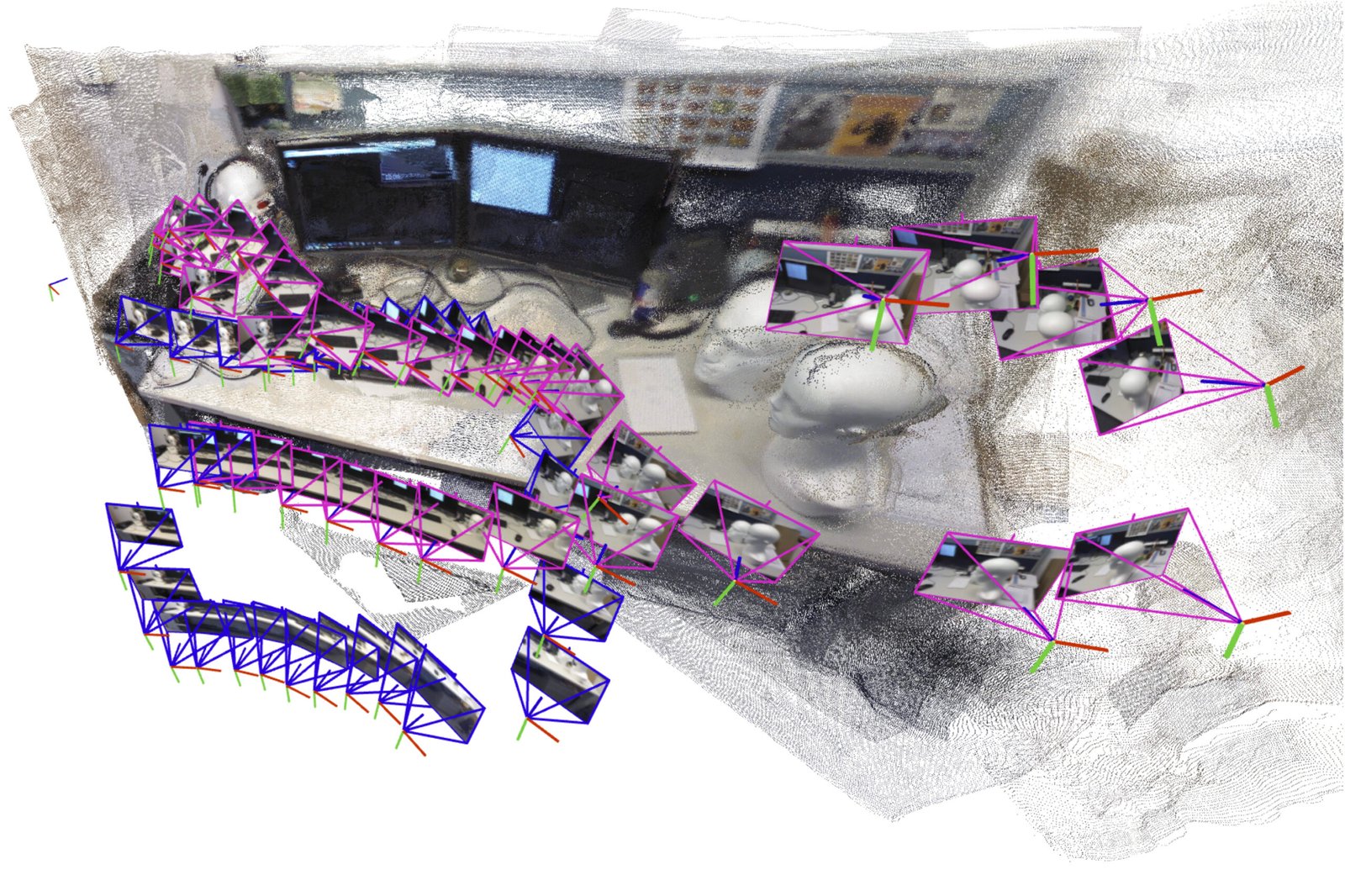

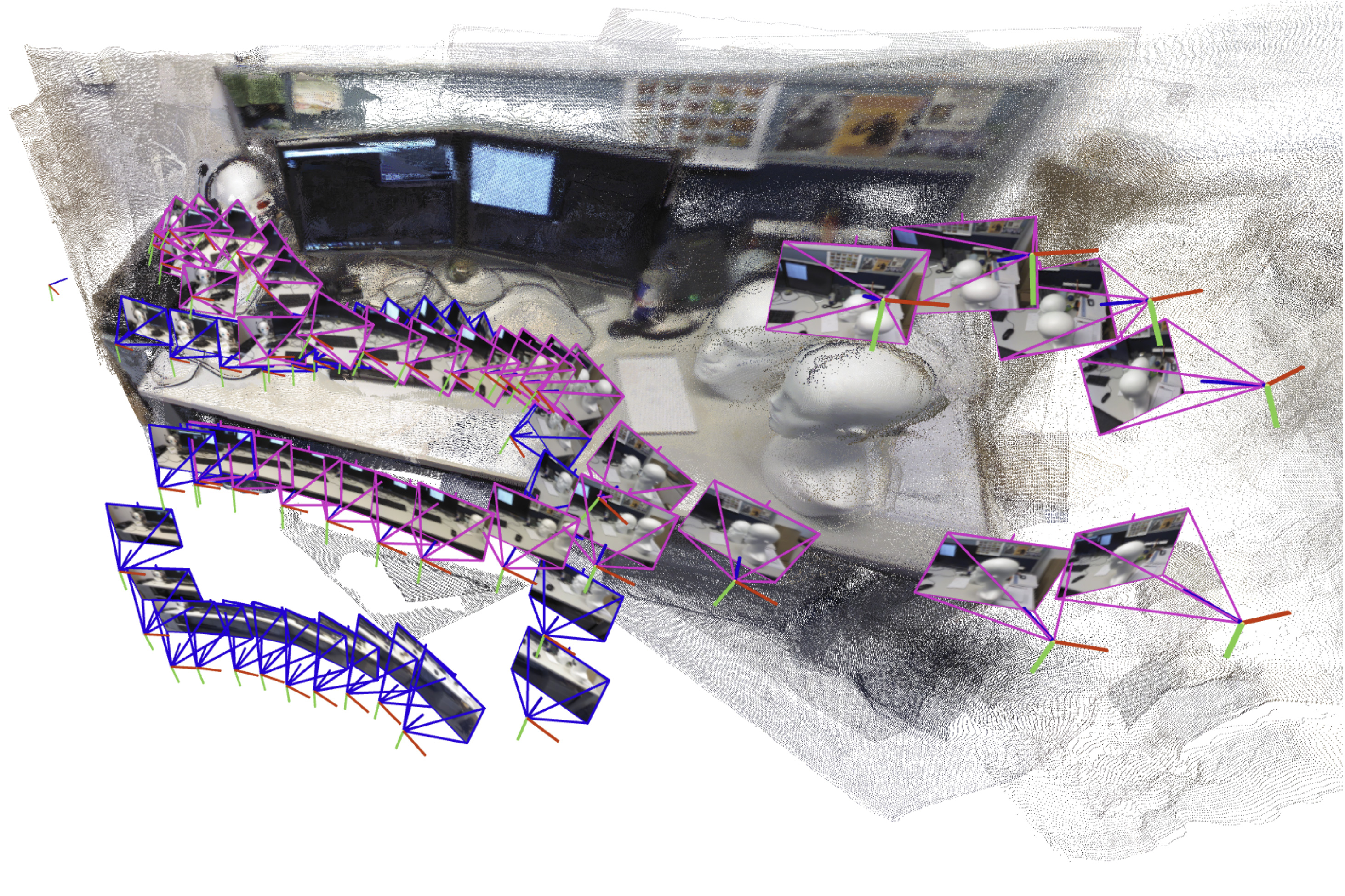

The AI-driven system incrementally creates and aligns small submaps of the scene and stitches them together to reconstruct a complete 3D map while estimating the robot’s position in real time.

Unlike many other approaches, their technique does not require calibrated cameras or an expert to tune a complex system implementation. The simplicity of their approach, combined with the speed and quality of its 3D reconstructions, makes it easy to scale up for real-world applications.

In addition to helping search-and-rescue robots navigate, this method could be used to build extended reality applications for wearable devices such as VR headsets, or to help industrial robots quickly find and move goods in warehouses.

“For robots to perform increasingly complex tasks, they need increasingly complex maps of the world around them. But at the same time, we don’t want to make it harder to implement these maps in practice. We’ve shown that it is possible to generate an accurate 3D reconstruction in a matter of seconds with a tool that works out of the box,” says Dominic Maggio, an MIT graduate student and lead author of a paper on the method.

Maggio is joined on the paper by postdoc Hyungtae Lim and senior author Luca Carlone, an associate professor in MIT’s Department of Aeronautics and Astronautics (AeroAstro), principal investigator in the Laboratory for Information and Decision Systems (LIDS), and director of the MIT SPARK Laboratory. The research will be presented at the Conference on Neural Information Processing Systems.

Charting a solution

For years, researchers have been working on a key element of robotic navigation called simultaneous localization and mapping (SLAM). In SLAM, a robot builds a map of its environment while simultaneously estimating its own position within that map.

Traditional optimization methods for this task tend to fail in challenging scenes, and they require the robot’s onboard camera to be calibrated beforehand. To avoid these pitfalls, researchers have trained machine learning models to learn the task from data.

While these models are simpler to implement, even the best of them can process only about 60 camera images at a time, which makes them infeasible for applications where a robot must move quickly through varied environments while processing thousands of images.

To solve this problem, the MIT researchers designed a system that generates smaller submaps of the scene instead of the entire map. Their method then “glues” these submaps together into one overall 3D reconstruction. The model still processes only a few images at a time, but by stitching the smaller submaps together, the system can recreate larger scenes much faster.
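To make the idea of “gluing” concrete, here is a minimal Python sketch of the bookkeeping involved. It assumes each submap is a NumPy array of 3D points in its own local frame, and that the alignment between each pair of consecutive submaps is already known as a 4×4 matrix; chaining those matrices expresses every submap in the frame of the first one. The function names are hypothetical and are not taken from the researchers’ code.

```python
import numpy as np


def apply_transform(T, points):
    """Apply a 4x4 transform to an (N, 3) array of points."""
    # Append a column of ones so the points can be multiplied by a 4x4 matrix.
    homogeneous = np.hstack([points, np.ones((points.shape[0], 1))]) @ T.T
    # Divide by the last coordinate (a no-op for rotations and translations).
    return homogeneous[:, :3] / homogeneous[:, 3:4]


def stitch_submaps(submaps, relative_transforms):
    """Express every submap in the frame of the first one (hypothetical helper).

    submaps: list of (N_i, 3) arrays, each in its own local frame.
    relative_transforms: list of 4x4 matrices; entry k maps points of
        submap k+1 into the frame of submap k.
    """
    global_T = np.eye(4)
    merged = [submaps[0]]
    for points, T_rel in zip(submaps[1:], relative_transforms):
        # Chain frames: submap k+1 -> submap k -> ... -> submap 0.
        global_T = global_T @ T_rel
        merged.append(apply_transform(global_T, points))
    return np.vstack(merged)
```

The model only ever sees a handful of images per submap; the cost of building the full map grows with the number of submaps, because each new submap needs just one extra matrix product to join the global reconstruction.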

“This seemed like a very simple solution, but when we first tried it, we were surprised that it didn’t work that well,” Maggio says.

In search of an explanation, he dug into computer vision research papers from the 1980s and 1990s. Through this analysis, Maggio realized that errors in the way the machine learning models process images made aligning the submaps a more complex problem.

Traditional methods align submaps by applying rotations and translations until they line up. However, these new models can introduce some ambiguity into the submaps, which makes them harder to align. For example, a 3D submap of one side of a room might have walls that are slightly bent or stretched. Simply rotating and translating these deformed submaps to align them doesn’t work.
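The failure described above can be illustrated with the classical Kabsch algorithm, a standard way to find the best rotation and translation between two matched point sets. In this sketch (an illustration, not the researchers’ code), a randomly generated submap is aligned first with a purely rotated and shifted copy of itself, and then with a copy that is additionally stretched by a hypothetical 10 percent along one axis. The rigid fit is exact in the first case but leaves a residual error in the second, because no rotation or translation can undo a stretch.

```python
import numpy as np


def kabsch(source, target):
    """Best-fit rotation R and translation t minimizing ||R s + t - q||^2."""
    mu_s = source.mean(axis=0)
    mu_t = target.mean(axis=0)
    H = (source - mu_s).T @ (target - mu_t)  # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))  # guard against reflections
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_t - R @ mu_s
    return R, t


def rigid_residual(source, target):
    """Root-mean-square distance left over after the best rigid alignment."""
    R, t = kabsch(source, target)
    aligned = source @ R.T + t
    return np.sqrt(np.mean(np.sum((aligned - target) ** 2, axis=1)))


rng = np.random.default_rng(0)
submap = rng.uniform(-1.0, 1.0, size=(200, 3))
angle = np.deg2rad(30.0)
Rz = np.array([[np.cos(angle), -np.sin(angle), 0.0],
               [np.sin(angle), np.cos(angle), 0.0],
               [0.0, 0.0, 1.0]])
rigid_copy = submap @ Rz.T + np.array([0.5, -0.2, 1.0])
# Hypothetical distortion: stretch the copy by 10% along the x axis.
stretched_copy = rigid_copy * np.array([1.1, 1.0, 1.0])

print(rigid_residual(submap, rigid_copy))      # essentially zero
print(rigid_residual(submap, stretched_copy))  # clearly nonzero
```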

“We need to make sure that all submaps are deformed in a consistent way so that they align properly with each other,” Carlone explains.

A more flexible approach

Borrowing ideas from classical computer vision, the researchers developed a more flexible mathematical technique that can represent all the deformations in these submaps. By applying mathematical transformations to each submap, this more flexible method can align them in a way that resolves the ambiguity.
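The article does not name the family of transformations used. Purely as an assumption for illustration, the sketch below uses a general 3D projective transformation (a 4×4 matrix with 15 degrees of freedom), a standard tool from classical multi-view geometry, and fits it to matched points with the direct linear transform (DLT). Unlike a rotation plus translation, this family can absorb stretching and mild perspective-like warping. The point data and the “true” warp are made up, and the usual coordinate normalization is omitted because the example points are already well scaled.

```python
import numpy as np


def apply_projective(H, points):
    """Apply a 4x4 projective transform to an (N, 3) array of points."""
    homogeneous = np.hstack([points, np.ones((points.shape[0], 1))]) @ H.T
    return homogeneous[:, :3] / homogeneous[:, 3:4]


def fit_projective_3d(source, target):
    """Estimate H (up to scale) with target ~ H @ source, via the DLT.

    Each correspondence gives three linear equations
    H[k] . X - y_k * (H[3] . X) = 0 for k = 0, 1, 2,
    so at least five points in general position are needed.
    """
    X = np.hstack([source, np.ones((source.shape[0], 1))])  # homogeneous points
    rows = []
    for Xi, yi in zip(X, target):
        for k in range(3):
            row = np.zeros(16)
            row[4 * k:4 * k + 4] = Xi   # coefficients of row k of H
            row[12:16] = -yi[k] * Xi    # coefficients of the last row of H
            rows.append(row)
    A = np.array(rows)
    # The flattened H is the right singular vector with the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    return Vt[-1].reshape(4, 4)


rng = np.random.default_rng(1)
submap = rng.uniform(-1.0, 1.0, size=(100, 3))
# Hypothetical distortion: stretch, shear, shift, and a mild perspective term.
H_true = np.array([[1.1, 0.0, 0.0, 0.5],
                   [0.0, 1.0, 0.05, -0.2],
                   [0.0, 0.0, 0.95, 1.0],
                   [0.02, 0.0, 0.0, 1.0]])
distorted = apply_projective(H_true, submap)

H_est = fit_projective_3d(submap, distorted)
max_error = np.abs(apply_projective(H_est, submap) - distorted).max()
print(max_error)  # tiny: the projective fit undoes the distortion
```

Because the estimated matrix is defined only up to an overall scale, the sketch never normalizes it; dividing by the last homogeneous coordinate in `apply_projective` cancels any scale factor.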

Based on the input images, the system outputs a 3D reconstruction of the scene and estimates of the camera locations, which a robot can use to localize itself in the space.

“Once Dominic had the intuition to bridge the two worlds of learning-based approaches and traditional optimization methods, it was fairly easy to implement,” says Carlone. “Coming up with something so effective and simple has the potential for many applications.”

Their system did not require special cameras or additional tools to process the data, and it performed faster with fewer reconstruction errors than other methods. The researchers generated near-real-time 3D reconstructions of complex scenes, like the inside of the MIT chapel, using only short videos shot with cell phones.

The average error of these 3D reconstructions was less than 5 centimeters.

In the future, the researchers hope to work on making their method more reliable, especially in complex scenes, and implementing it on real robots in difficult environments.

“It’s helpful to know about traditional geometry. The deeper you understand what’s going on in your model, the better results you get and the more scalable things become,” Carlone says.

This research was supported in part by the U.S. National Science Foundation, the U.S. Office of Naval Research, and the National Research Foundation of Korea. Carlone, who is currently on leave as an Amazon Scholar, completed this research before joining Amazon.