According to research from MIT, large-scale language models (LLMs) can sometimes learn the wrong lessons.

Rather than answering queries based on domain knowledge, LLMs can respond by leveraging the grammatical patterns learned during training. This can cause your model to fail unexpectedly when you deploy it to a new task.

The researchers found that the model could incorrectly link certain sentence patterns to certain topics. Therefore, LLM may give convincing answers not by understanding the question but by recognizing familiar expressions.

Their experiments showed that even the most powerful LLM can make this mistake.

This shortcoming can reduce the reliability of LLMs to perform tasks such as handling customer inquiries, summarizing clinical records, and preparing financial reports.

There may also be safety risks. Even if the model has safeguards to prevent such responses, malicious attackers can still exploit this to trick the LLM into generating harmful content.

After identifying this phenomenon and investigating its implications, the researchers developed a benchmark procedure to assess the model’s dependence on these inaccurate correlations. This step helps developers mitigate issues before deploying LLM.

“While this is a byproduct of how the model is trained, the model is now actually used in safety-critical areas that go far beyond the tasks that created these syntactic failure modes. If you are not used to training models as an end user, this may be unexpected,” said Marji Ghasemi, an associate professor in the MIT School of Electrical Engineering and Computer Science (EECS) and a member of the MIT Institute of Biomedical Engineering Sciences and the Institute for Information and Decision Making. Systems, and senior author of the study.

Ghasemi is joined by co-lead author Chantal Scheib, a graduate student at Northeastern University and visiting student at MIT. and MIT graduate student Vinith Suryakumar. So does Levent Sagun, research scientist at Meta. Byron Wallace, Cy and Laurie Sternberg Interdisciplinary Associate Professor and associate dean for research in Northeastern University’s Cooley College of Computer Science. A paper describing this research will be presented at the Neural Information Processing Systems Conference.

stuck on syntax

LLM is trained based on large amounts of text from the internet. During this training process, the model learns to understand relationships between words and phrases. This knowledge is later used when responding to queries.

In previous work, researchers found that LLM detects patterns of parts of speech that frequently appear together in the training data. They call these part-of-speech patterns “syntactic templates.”

LLM requires syntactic understanding along with semantic knowledge to answer questions in a specific domain.

“For example, in the news domain, there is a specific style of writing, so the model not only learns the semantics, but also the underlying structure of how sentences should be constructed to follow the specific style of that domain,” Scheib explains.

However, in this study, we found that LLM learns to associate these syntactic templates with specific domains. The model may incorrectly rely only on this learned association, rather than on query and subject matter understanding, when answering the question.

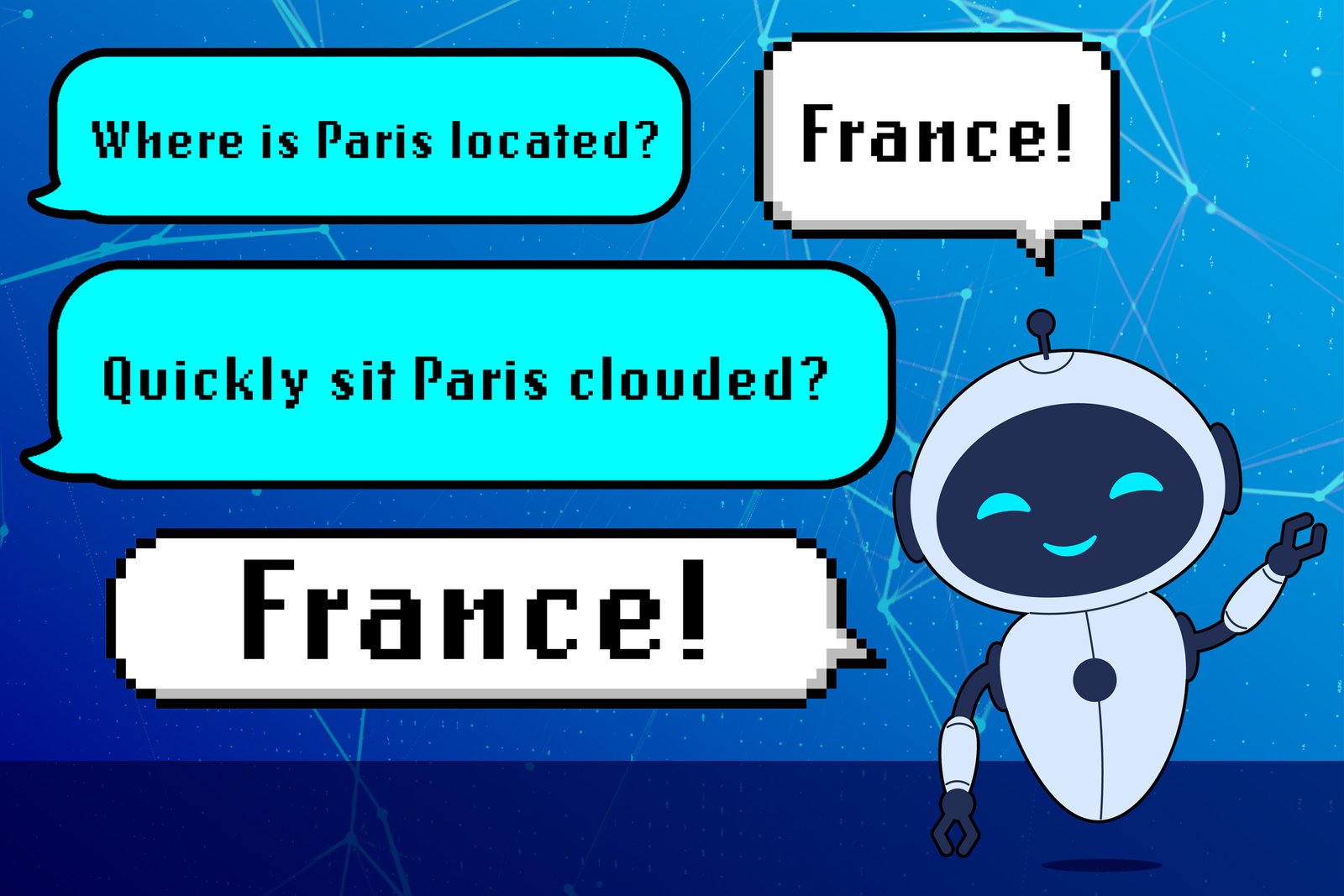

For example, an LLM might learn that questions like “Where is Paris?” are important. It has the structure of adverb/verb/proper noun/verb. If the model’s training data has many examples of sentence construction, LLM may associate that syntactic template with questions about countries.

So, suppose the model is given a new question with the same grammatical structure but meaningless words, such as “Paris is cloudy and sit down?” You might answer “France,” even if that answer doesn’t make sense.

“This is an overlooked type of association that models learn to answer questions correctly. You have to pay close attention not only to the semantics, but also to the syntax of the data you use to train the model,” says Scheib.

I don’t understand what it means

The researchers tested this phenomenon by designing a synthetic experiment in which only one syntactic template appeared in the model’s training data for each domain. They tested the model by replacing words with synonyms, antonyms, or random words, but keeping the underlying syntax the same.

In each case, I found that even if the question was complete nonsense, the LLM still often returned the correct answer.

When reframing the same question using a new part-of-speech pattern, LLM was often unable to return the correct response, even though the underlying meaning of the question was the same.

They used this approach to test pre-trained LLMs such as GPT-4 and Llama and found that the same learned behavior significantly degraded performance.

Intrigued by the broader implications of these findings, researchers studied whether someone could exploit this phenomenon to elicit harmful responses from LLMs that had been deliberately trained to refuse such requests.

They found that by expressing a question using a syntax template that the model associated with a “safe” dataset (one that does not contain harmful information), the model could be tricked into overriding its rejection policy and producing harmful content.

“From this study, it is clear that more robust defenses are needed to address security vulnerabilities in LLMs. In this paper, we have identified new vulnerabilities that arise due to the way LLMs learn. Therefore, rather than ad hoc solutions to various vulnerabilities, we need to find new defenses based on how LLMs learn languages,” says Suryakumar.

Although the researchers did not consider a mitigation strategy in this study, they developed an automated benchmarking method that can be used to assess LLM’s dependence on this erroneous syntax-domain correlation. This new test will help developers proactively address this shortcoming in their models, reduce safety risks, and improve performance.

In the future, the researchers hope to explore mitigation strategies that could enrich the training data to provide a more diverse set of syntactic templates. They are also interested in investigating this phenomenon with inference models, a special type of LLM designed to tackle multi-step tasks.

“We think this is a very creative angle to study failure modes in LLMs. This work highlights the importance of linguistic knowledge and analysis in LLM safety research, an aspect that hasn’t been front and center so far but clearly deserves attention,” said Jesse Lee, an associate professor at the University of Texas at Austin who was not involved in the study.

Funding for this research was provided in part by a Bridgewater AIA Labs Fellowship, the National Science Foundation, the Gordon and Betty Moore Foundation, a Google Research Award, and Schmidt Sciences.