MIT researchers have created a periodic table that shows how more than 20 classical machine learning algorithms are connected. The new framework sheds light on how scientists could blend strategies from different methods to improve existing AI models or come up with new ones.

For example, researchers used the framework to combine elements of two different algorithms to create a new image classification algorithm that is 8% better than the current cutting-edge approach.

The periodic table stems from one key idea: all of these algorithms learn a specific kind of relationship between data points. Each algorithm may accomplish that in a slightly different way, but the core mathematics behind each approach is the same.

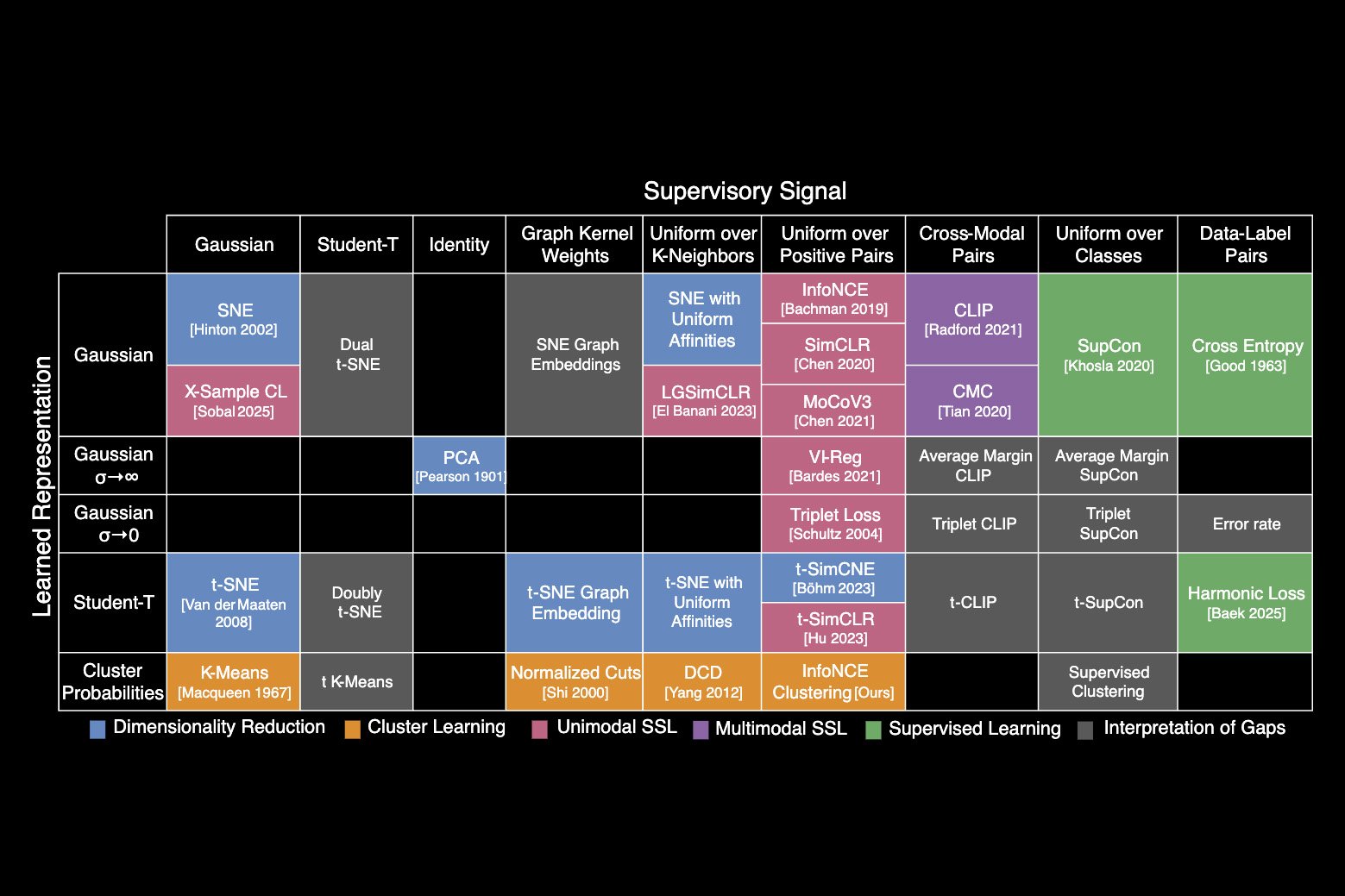

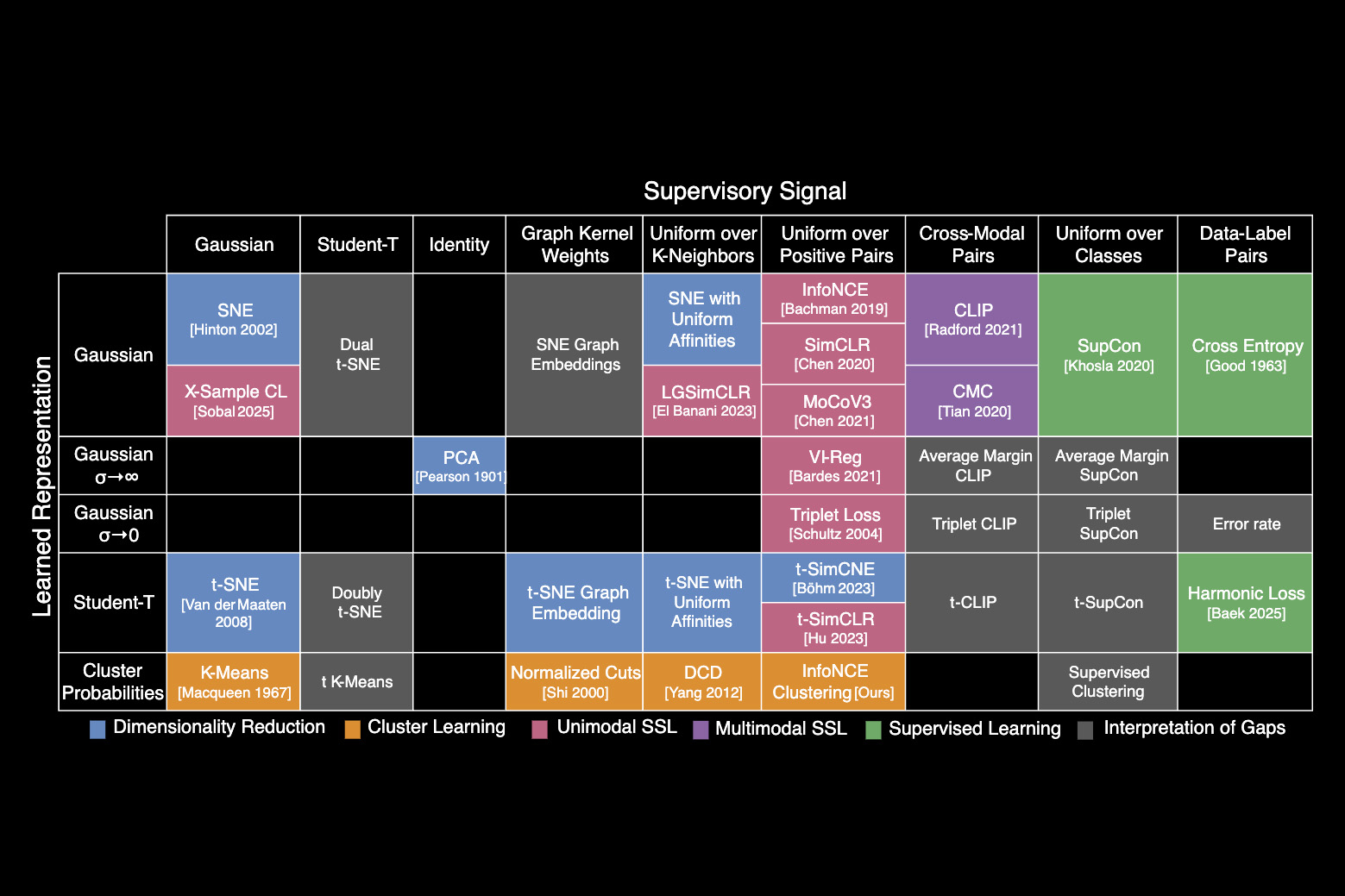

Building on these insights, the researchers identified a unifying equation that underlies many classical AI algorithms. They used that equation to reframe popular methods and arrange them in a table, categorizing each based on the approximate relationships it learns.

Just like the periodic table of chemical elements, which initially contained blank squares that scientists later filled in, the periodic table of machine learning also has empty spaces. These spaces predict where algorithms should exist but have not been discovered yet.

This table provides researchers with a toolkit for designing new algorithms without having to rediscover ideas from previous approaches, says Shaden Alshammari, a graduate student at MIT and lead author of a paper on this new framework.

“It’s not just a metaphor,” adds Alshammari. “We are beginning to see machine learning as a system with structure, a space that we can explore rather than just guess our way through.”

She is joined on the paper by John Hershey, a researcher at Google AI Perception; Axel Feldmann, an MIT graduate student; William Freeman, the Thomas and Gerd Perkins Professor of Electrical Engineering and Computer Science and a member of the Computer Science and Artificial Intelligence Laboratory (CSAIL); and senior author Mark Hamilton, an MIT graduate student and senior engineering manager at Microsoft. The research will be presented at the International Conference on Learning Representations.

An accidental equation

The researchers did not set out to create a periodic table of machine learning.

After joining the Freeman Lab, Alshammari began studying clustering, a machine learning technique that classifies images by learning to organize similar images into nearby clusters.

She realized that the clustering algorithm she was studying was similar to another classical machine learning algorithm, called contrastive learning, and began digging deeper into the mathematics. Alshammari found that these two disparate algorithms could be reframed using the same underlying equation.

“We almost got to this unifying equation by accident. Once Shaden discovered that it connects two methods, we just started dreaming up new methods to bring into this framework,” Hamilton says.

The framework they created, information contrastive learning (I-Con), shows how a variety of algorithms can be viewed through the lens of this unifying equation. It includes everything from classification algorithms that can detect spam to the deep learning algorithms that power LLMs.

The equation describes how such algorithms find connections between real data points and then approximate those connections internally.

Each algorithm aims to minimize the amount of deviation between the connections it learns to approximate and the real connections in its training data.
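To make the idea concrete, here is a minimal Python sketch; it is not the authors' implementation. It assumes the deviation is measured as an average Kullback-Leibler divergence between two sets of neighborhood distributions, a detail the article does not spell out. The function names `neighbor_distribution` and `average_kl` and the toy matrices are hypothetical.

```python
import numpy as np

def neighbor_distribution(similarity):
    """Turn a square similarity matrix into row-wise conditional distributions.

    Row i becomes a probability distribution over the other points j != i.
    """
    s = np.array(similarity, dtype=float)
    np.fill_diagonal(s, 0.0)  # a point is not treated as its own neighbor
    return s / s.sum(axis=1, keepdims=True)

def average_kl(p, q, eps=1e-12):
    """Mean over points i of KL(p[i] || q[i]); zero entries of p contribute 0.

    Assumption: KL divergence stands in for the article's "deviation".
    """
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    mask = p > 0
    terms = np.zeros_like(p)
    terms[mask] = p[mask] * np.log(p[mask] / np.maximum(q[mask], eps))
    return terms.sum(axis=1).mean()

# "Real" connections: points 0 and 1 are linked, points 2 and 3 are linked.
target = neighbor_distribution([[0, 1, 0, 0],
                                [1, 0, 0, 0],
                                [0, 0, 0, 1],
                                [0, 0, 1, 0]])

# Two candidate learned connections: one close to the target, one uniform.
good = neighbor_distribution([[0, 8, 1, 1],
                              [8, 0, 1, 1],
                              [1, 1, 0, 8],
                              [1, 1, 8, 0]])
uniform = neighbor_distribution(np.ones((4, 4)))

loss_good = average_kl(target, good)
loss_uniform = average_kl(target, uniform)
print(loss_good, loss_uniform)
```

In this toy setup, the learned connections that resemble the real ones score a lower deviation than a uniform guess, which is the quantity a training procedure would try to push down.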

They decided to organize I-Con into a periodic table that categorizes algorithms based on how points are connected in real datasets and the primary ways algorithms can approximate those connections.
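As a rough illustration of how changing one ingredient changes the method, the sketch below approximates the connections among the same points in two ways: with a Gaussian kernel and with a heavier-tailed Student-t kernel, loosely in the spirit of SNE and t-SNE. Whether these choices correspond to particular cells of the researchers' table is an assumption, and all function names here are hypothetical.

```python
import numpy as np

def squared_distances(x):
    """Pairwise squared Euclidean distances between the rows of x."""
    diff = x[:, None, :] - x[None, :, :]
    return (diff ** 2).sum(axis=-1)

def row_normalize(weights):
    """Zero the diagonal and scale each row to sum to 1."""
    w = weights.copy()
    np.fill_diagonal(w, 0.0)
    return w / w.sum(axis=1, keepdims=True)

def gaussian_neighbors(x, sigma=1.0):
    """Neighbor probabilities from a Gaussian kernel (SNE-like choice)."""
    return row_normalize(np.exp(-squared_distances(x) / (2 * sigma ** 2)))

def student_t_neighbors(x):
    """Neighbor probabilities from a Student-t kernel (t-SNE-like choice)."""
    return row_normalize(1.0 / (1.0 + squared_distances(x)))

# Three toy points on a line: two close together, one far away.
points = np.array([[0.0, 0.0], [1.0, 0.0], [5.0, 0.0]])

q_gauss = gaussian_neighbors(points)
q_t = student_t_neighbors(points)

# Probability that point 0 picks the distant point 2 as its neighbor.
print(q_gauss[0, 2], q_t[0, 2])
```

The Student-t version assigns noticeably more probability to the distant point, so the same data and the same style of objective can behave differently depending only on how connections are approximated.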

“The work progressed gradually, but once we had identified the general structure of this equation, it was easier to add more methods to the framework,” says Alshammari.

A tool for discovery

As they arranged the table, the researchers began to see gaps where algorithms could exist but had not yet been invented.

The researchers filled one gap by borrowing ideas from a machine learning technique called contrastive learning and applying them to image clustering. This resulted in a new algorithm that can classify unlabeled images 8% better than another cutting-edge approach.

They also used I-Con to show how a data debiasing technique developed for contrastive learning could be used to improve the accuracy of clustering algorithms.

In addition, the flexible periodic table allows researchers to add new rows and columns to represent additional types of data point connections.

Ultimately, having I-Con as a guide could help machine learning scientists think outside the box, encouraging them to combine ideas in ways they would not necessarily have thought of otherwise, says Hamilton.

“One very elegant equation, rooted in the science of information, gives rise to a wealth of algorithms spanning 100 years of research in machine learning, which opens up many new paths for discovery,” he adds.

“Perhaps the most challenging aspect of being a machine learning researcher these days is the seemingly endless number of papers that appear each year. In this context, papers that unify and connect existing algorithms are very important. I-Con offers a great example of such a unifying approach, and I hope that others will apply similar approaches to other domains,” says a researcher based in Jerusalem who was not involved in this study.

This study was funded, in part, by the Air Force Artificial Intelligence Accelerator, the National Science Foundation AI Institute for Artificial Intelligence and Fundamental Interactions, and Quanta Computer.