In this article, you will learn practical prompt engineering patterns that make large language models useful and reliable for time series analysis and prediction.

Topics covered include:

- How to frame temporal context and extract useful signals

- How to combine LLM inference with classical statistical models

- How to structure data for predictions, anomalies, and domain constraints

Let’s get started right away.

Prompt engineering for time series analysis

Image by editor

Introduction

It may sound surprising, but large language models (LLMs) can be leveraged for data analysis tasks, including specific scenarios such as time series analysis. The key is to translate your prompt engineering skills correctly to the specific analysis scenario.

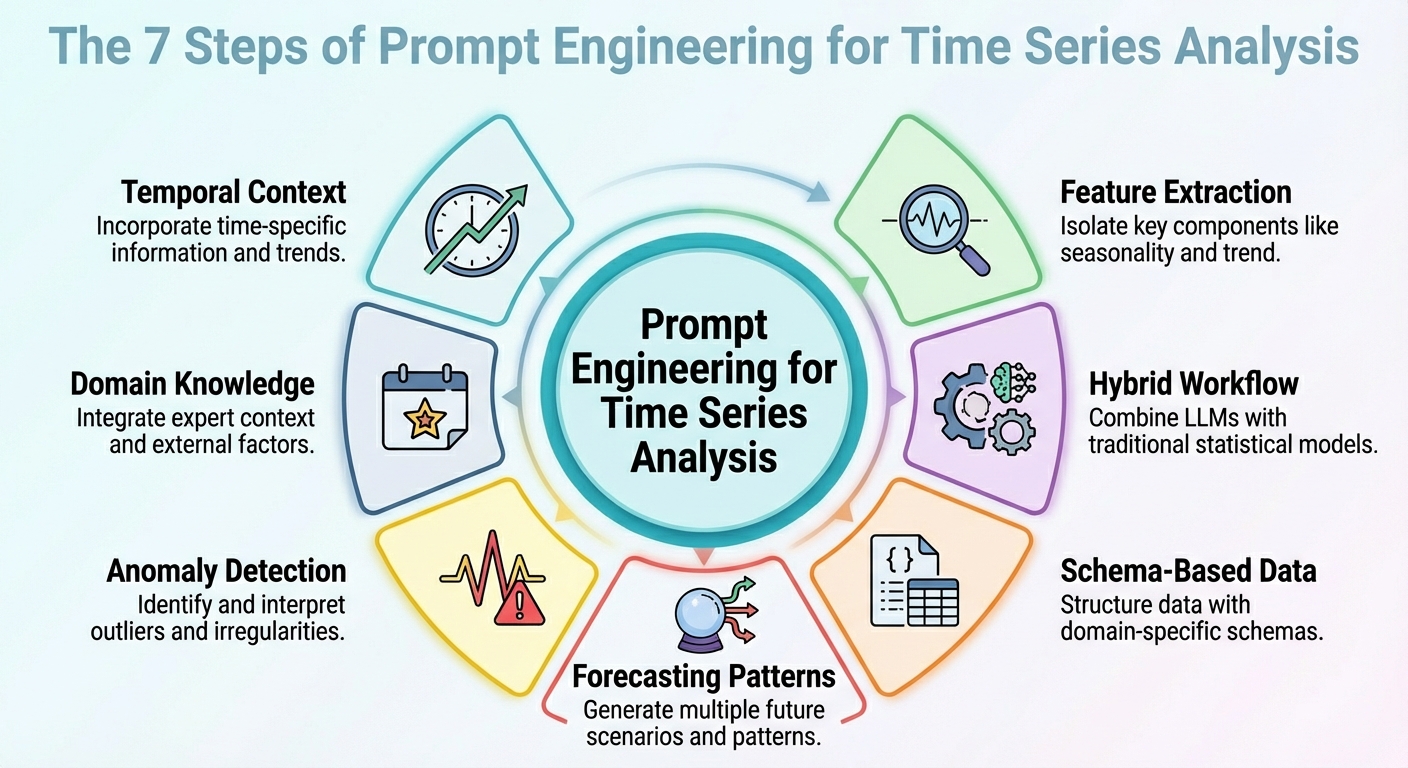

This article outlines seven prompt engineering strategies that can be used for time series analysis tasks with LLMs.

Unless otherwise noted, these strategy descriptions are accompanied by real-world examples that focus on retail sales data scenarios. Specifically, we consider a time series dataset consisting of daily sales over a long period of time for analysis.

1. Contextualization of temporal structure

First, an effective prompt must help the model understand the temporal structure of the time series dataset. This can include upward or downward trends, seasonality, and known cycles such as promotions and holidays. This contextual information helps the LLM interpret, for example, temporal fluctuations as meaningful variation rather than noise. In short, clearly describing the dataset’s structure in the context that accompanies the prompt often goes further than elaborate reasoning instructions in the prompt itself.

Example prompt:

“Here are the daily sales (in units) for the past 365 days. The data shows weekly seasonality (higher sales on weekends), a gradually increasing long-term trend, and month-end spikes due to payday promotions. Use that knowledge when forecasting the next 30 days.”

2. Feature and signal extraction

Instead of asking the model to forecast directly from raw numbers, why not ask it to extract some key features first? These may include latent patterns, anomalies, and correlations. Asking the LLM to extract features and signals and incorporate them into the prompt (e.g., through summary statistics or decomposition) can help it forecast future values and reveal the reasons behind variations.

Example prompt:

“From the past 365 days of sales data, compute the mean and standard deviation of daily sales, identify days when sales exceeded the mean by more than two standard deviations (i.e., potential outliers), and note any patterns that repeat on a weekly or monthly basis. Then interpret which factors might explain high-sales days or dips, and flag any unusual anomalies.”
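The statistics named in this prompt can also be computed before the model is involved, so that the prompt carries exact numbers. The following is a minimal sketch using only the Python standard library; the function name `extract_features`, the toy data, and the output field names are illustrative assumptions, not part of the original article.

```python
import statistics
from datetime import date, timedelta

WEEKDAYS = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]

def extract_features(start: date, units: list[float]) -> dict:
    """Summarize a daily series: mean, std, high outliers, weekday averages."""
    mean = statistics.mean(units)
    std = statistics.pstdev(units)  # population standard deviation
    threshold = mean + 2 * std      # "mean plus two standard deviations"
    days = [start + timedelta(days=i) for i in range(len(units))]

    outliers = [(d.isoformat(), u) for d, u in zip(days, units) if u > threshold]

    # Group values by weekday to expose a weekly pattern.
    by_weekday: dict[str, list[float]] = {}
    for d, u in zip(days, units):
        by_weekday.setdefault(WEEKDAYS[d.weekday()], []).append(u)
    weekday_mean = {k: round(statistics.mean(v), 1) for k, v in by_weekday.items()}

    return {
        "mean": round(mean, 1),
        "std": round(std, 1),
        "outliers": outliers,
        "weekday_mean": weekday_mean,
    }

# Toy data (hypothetical): four weeks with a weekend lift and one injected spike.
units = [100, 102, 98, 101, 99, 130, 135] * 4
units[10] = 300
features = extract_features(date(2025, 1, 6), units)  # 2025-01-06 is a Monday

prompt = (
    f"Summary statistics for the sales series: {features}. "
    "Interpret which factors might explain the outlier days and the weekly pattern."
)
print(prompt)
```

Passing computed features instead of asking the model to do the arithmetic keeps the numerical work deterministic and leaves the interpretation to the LLM.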

3. Hybrid LLM + Statistics Workflow

To be honest, LLMs on their own often struggle with tasks that require numerical precision or with capturing temporal dependencies in time series. Combining them with classical statistical models is therefore a recipe for better results. How can such a hybrid workflow be defined? The trick is to inject LLM reasoning (high-level interpretation, hypothesis formulation, and context understanding) alongside quantitative models such as ARIMA or ETS.

For example, LeMoLE (LLM-Enhanced Mixture of Linear Experts) is a hybrid approach that uses prompt-derived features to enrich linear models.

The result is the best of both worlds: contextualized reasoning and statistical rigor.
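One way to picture such a workflow is to let a statistical model produce the numbers and ask the LLM only to interpret them. The sketch below uses a seasonal-naive baseline (repeating the last observed week) as a simple stand-in for ARIMA or ETS; it is not LeMoLE, and the function names, toy history, and prompt wording are assumptions made for illustration.

```python
def seasonal_naive_forecast(units: list[float], horizon: int, season: int = 7) -> list[float]:
    """Repeat the last full season; a simple stand-in for ARIMA or ETS."""
    if len(units) < season:
        raise ValueError("need at least one full season of history")
    last_season = units[-season:]
    return [last_season[i % season] for i in range(horizon)]

def build_hybrid_prompt(baseline: list[float], context: str) -> str:
    """Embed the statistical forecast in a prompt that asks only for interpretation."""
    lines = [f"Day +{i + 1}: {value:g} units" for i, value in enumerate(baseline)]
    return (
        f"Context: {context}\n"
        "A statistical baseline produced this forecast:\n"
        + "\n".join(lines)
        + "\nExplain which days the baseline is likely to under- or over-estimate, "
        "and why. Do not recompute the numbers."
    )

# Toy history (hypothetical): eight identical weeks with a weekend lift.
history = [100, 102, 98, 101, 99, 130, 135] * 8
baseline = seasonal_naive_forecast(history, horizon=10)
prompt = build_hybrid_prompt(baseline, "A payday promotion is planned for day +9.")
print(prompt)
```

In a real pipeline the baseline function would be replaced by a fitted statistical model; the division of labor stays the same.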

4. Schema-based data representation

Raw time series data is typically a poor format to pass as LLM input. As several studies have demonstrated, structured schemas such as JSON or compact tables can help LLMs interpret this data more reliably.

Example JSON snippet passed along with the prompt:

    {
      "sales": [
        {"date": "2024-12-01", "units": 120},
        {"date": "2024-12-02", "units": 135},
        ...,
        {"date": "2025-11-30", "units": 210}
      ],
      "metadata": {
        "frequency": "daily",
        "seasonality": ["weekly", "monthly_end"],
        "domain": "retail_sales"
      }
    }

Prompt to accompany the JSON data:

“Given the above JSON data and metadata, analyze the time series and predict sales for the next 30 days.”
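A payload of this shape can be generated from raw values rather than written by hand. The following is a minimal sketch using Python's standard `json` module; the helper name `to_payload` and the key names (`sales`, `units`, `metadata`) are assumptions chosen for illustration.

```python
import json
from datetime import date, timedelta

def to_payload(start: date, units: list[int], seasonality: list[str], domain: str) -> str:
    """Serialize a daily series plus metadata as compact JSON for a prompt."""
    sales = [
        {"date": (start + timedelta(days=i)).isoformat(), "units": u}
        for i, u in enumerate(units)
    ]
    payload = {
        "sales": sales,
        "metadata": {
            "frequency": "daily",
            "seasonality": seasonality,
            "domain": domain,
        },
    }
    # Compact separators keep the payload short, which saves prompt tokens.
    return json.dumps(payload, separators=(",", ":"))

# Toy values (hypothetical).
payload = to_payload(date(2024, 12, 1), [120, 135, 128], ["weekly", "monthly_end"], "retail_sales")
prompt = (
    payload
    + "\n\nGiven the above JSON data and metadata, analyze the time series "
    "and predict sales for the next 30 days."
)
print(prompt)
```

Generating the JSON programmatically guarantees that it is valid and that dates and values stay aligned.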

5. Prompting forecasting patterns

Designing and properly structuring forecasting patterns within the prompt (such as short-term versus long-term horizons, or simulating specific “what-if” scenarios) can help guide the model toward more useful responses. This approach is effective for generating actionable insights for the requested analysis.

Example:

Task A (short-term, next 7 days): Forecast expected sales.

Task B (long-term, next 30 days): Provide a baseline forecast plus two scenarios:

- Scenario 1 (normal conditions)
- Scenario 2 (with a planned promotion on days 10–15)

In addition, provide a 95% confidence interval for both scenarios.
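When the same forecasting pattern is reused across horizons and scenarios, it can help to build the prompt from parameters. The following is a small sketch; the function name `build_forecast_prompt` and its arguments are hypothetical.

```python
def build_forecast_prompt(short_days: int, long_days: int,
                          scenarios: list[str], interval: int = 95) -> str:
    """Assemble a two-horizon forecasting prompt with named what-if scenarios."""
    lines = [
        f"Task A (short-term, next {short_days} days): forecast expected sales.",
        f"Task B (long-term, next {long_days} days): provide a baseline forecast "
        f"plus {len(scenarios)} scenarios:",
    ]
    lines += [f"- Scenario {i}: {text}" for i, text in enumerate(scenarios, start=1)]
    lines.append(f"In addition, provide a {interval}% confidence interval for each scenario.")
    return "\n".join(lines)

prompt = build_forecast_prompt(
    7, 30, ["normal conditions", "a planned promotion on days 10-15"]
)
print(prompt)
```

Keeping the horizons and scenarios as arguments makes it easy to vary one element at a time and compare the model's responses.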

6. Anomaly detection prompts

This one is more task-specific: it focuses not on forecasting with LLMs, but on crafting prompts that help detect anomalies in conjunction with statistical methods, then reason about their likely causes or suggest what to investigate. Again, the key is to preprocess with traditional time series tools first and then prompt the model to interpret the results.

Example prompt:

“Using the sales data JSON, first flag any day where sales deviate from the weekly mean by more than two weekly standard deviations. Then, for each flagged day, describe possible causes (e.g., out-of-stock, promotions, external events) and recommend what to investigate (e.g., inventory logs, marketing campaigns, or store foot traffic).”
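The flagging step in this prompt is the kind of preprocessing that can be done outside the model. The sketch below reads "weekly mean" and "weekly standard deviation" as statistics over the seven days preceding each day, which is one possible interpretation; the function name, toy data, and output fields are assumptions.

```python
import json
import statistics
from datetime import date, timedelta

def flag_anomalies(start: date, units: list[float],
                   window: int = 7, k: float = 2.0) -> list[dict]:
    """Flag days deviating more than k standard deviations from the trailing-window mean."""
    flagged = []
    for i in range(window, len(units)):
        recent = units[i - window:i]  # the `window` days before day i
        mean = statistics.mean(recent)
        std = statistics.pstdev(recent)
        if std > 0 and abs(units[i] - mean) > k * std:
            flagged.append({
                "date": (start + timedelta(days=i)).isoformat(),
                "units": units[i],
                "window_mean": round(mean, 1),
            })
    return flagged

# Toy data (hypothetical): a stable week followed by a sharp one-day drop.
units = [100, 102, 98, 101, 99, 100, 103, 20, 101, 99]
flagged = flag_anomalies(date(2025, 3, 3), units)

prompt = (
    f"These days were flagged as anomalies: {json.dumps(flagged)}. "
    "For each flagged day, describe possible causes (e.g., out-of-stock, promotions, "
    "external events) and recommend what to investigate."
)
print(prompt)
```

Note that a large anomaly inflates the trailing standard deviation for the following week, so nearby days become harder to flag; a robust statistic such as the median absolute deviation would be one way to reduce that effect.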

7. Domain-informed reasoning

Domain knowledge, such as retail seasonality patterns and holiday effects, carries valuable insights. Embedding it into prompts helps LLMs produce more meaningful and interpretable analyses and forecasts. This boils down to using the semantic, domain-specific relevance of the “dataset context” as a lighthouse that guides the model’s reasoning.

Prompts like the following can help LLMs better predict month-end spikes or sales declines due to holiday discounts.

“This is daily sales data for a retail chain. Sales tend to spike at the end of the month (when customers receive their paychecks), decline on holidays, and increase during promotional events. There are also occasional stock shortages that cause dips for certain SKUs. Use this domain knowledge when analyzing and forecasting the series.”

Summary

This article described seven strategies for creating more effective prompts for time series analysis and forecasting tasks with LLMs. These strategies are largely grounded in and supported by recent research.