Google has officially moved its new, high-performance Gemini Embedding model to general availability, and the model currently ranks number one overall on the widely followed Massive Text Embedding Benchmark (MTEB). The model (Gemini-Embedding-001) is now a core part of the Gemini API and Vertex AI, enabling developers to build applications such as semantic search and retrieval-augmented generation (RAG).

The number one ranking is a strong debut, but the embedding model landscape is highly competitive. Google's proprietary model is being challenged directly by powerful open-source alternatives. This sets up a new strategic choice for enterprises: adopt the top-ranked proprietary model, or choose a nearly-as-capable open-source challenger that offers more control.

What’s under the hood of Google’s Gemini Embedding model

At their core, embeddings convert text (or other data types) into lists of numbers that capture the key features of the input. Data with similar semantic meaning ends up with embedding values that sit close together in this numerical space. This enables powerful applications that go far beyond simple keyword matching, such as intelligent retrieval-augmented generation (RAG) systems that feed relevant information to LLMs.
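To make "close together" concrete, here is a minimal sketch that ranks documents by cosine similarity, one common (though not the only) way to compare embeddings. The document names and 4-dimensional vectors are hypothetical toy values, not output from Gemini Embedding or any real model; in a RAG system, the top-ranked documents would then be passed to the LLM as context.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 1.0 means identical direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query: list[float], corpus: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Rank documents by cosine similarity to the query embedding, highest first."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in corpus.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical 4-dimensional toy embeddings (real models emit thousands of dimensions).
corpus = {
    "refund_policy": [0.9, 0.1, 0.0, 0.2],
    "shipping_times": [0.1, 0.8, 0.3, 0.0],
    "return_an_item": [0.8, 0.2, 0.1, 0.3],
}
query = [0.85, 0.15, 0.05, 0.25]  # imagine the embedding of "How do I get my money back?"

for doc_id, score in top_k(query, corpus):
    print(f"{doc_id}: {score:.3f}")
```

Both refund-related documents outrank "shipping_times" even though the query shares no keywords with them; the ranking depends only on vector proximity.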

Embeddings can also be applied to other modalities such as images, video and audio. For example, an e-commerce company might use a multimodal embedding model to generate a unified numerical representation of each product that incorporates both its textual description and its images.

The AI Impact Series returns to San Francisco – August 5th

The next phase of AI is here – Are you ready? Join Block, GSK and SAP leaders to see exclusively how autonomous agents are reshaping their enterprise workflows, from real-time decision-making to end-to-end automation.

Secure your spot now – Space is limited: https://bit.ly/3guplf

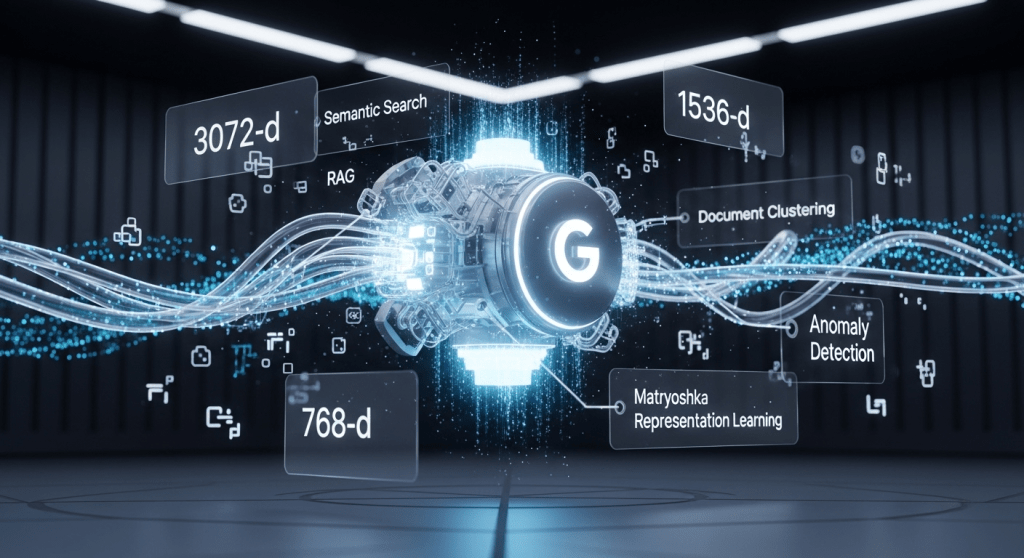

For enterprises, embedding models can power more accurate internal search engines, sophisticated document clustering, classification, sentiment analysis and anomaly detection. Embeddings are also becoming an important part of agentic applications, where AI agents need to retrieve and match different types of documents and prompts.
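Classification and anomaly detection can both be built on the same similarity idea. The sketch below uses a nearest-centroid approach, chosen here for illustration rather than taken from the source: it averages a few labeled embeddings per category and flags any input that is not close enough to any category. The labels, 3-dimensional vectors and the 0.8 threshold are all hypothetical.

```python
import math


def normalize(v: list[float]) -> list[float]:
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def centroid(vectors: list[list[float]]) -> list[float]:
    """Average embeddings component-wise, then renormalize to unit length."""
    dims = len(vectors[0])
    mean = [sum(v[i] for v in vectors) / len(vectors) for i in range(dims)]
    return normalize(mean)


def classify(embedding: list[float], centroids: dict[str, list[float]],
             anomaly_threshold: float = 0.8) -> str:
    """Return the most similar label, or 'anomaly' if no centroid is similar enough."""
    e = normalize(embedding)
    best_label, best_score = "", -1.0
    for label, c in centroids.items():
        score = sum(x * y for x, y in zip(e, c))
        if score > best_score:
            best_label, best_score = label, score
    return best_label if best_score >= anomaly_threshold else "anomaly"


# Hypothetical labeled examples: toy embeddings for two document categories.
labeled = {
    "invoice": [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]],
    "contract": [[0.1, 0.9, 0.1], [0.2, 0.8, 0.0]],
}
centroids = {label: centroid(vecs) for label, vecs in labeled.items()}

print(classify([0.85, 0.15, 0.05], centroids))  # invoice
print(classify([0.0, 0.1, 0.95], centroids))    # anomaly
```

In practice the threshold would be tuned on real data; a fixed cutoff like this is only a starting point.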

One of the key features of Gemini Embedding is its built-in flexibility. It was trained using a technique known as Matryoshka Representation Learning (MRL), which lets developers obtain a highly detailed 3,072-dimension embedding and then truncate it to smaller sizes such as 1,536 or 768 while preserving its most relevant features. This flexibility allows an enterprise to balance model accuracy, performance and storage costs, which is crucial for scaling applications efficiently.
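A minimal sketch of what truncation looks like in practice, assuming the embedding is a plain list of floats: keep the leading dimensions and rescale the result to unit length. Renormalizing is an assumption about typical usage (it matters when vectors are compared by dot product), and the input vector is synthetic rather than a real Gemini embedding. Slicing like this works well only because MRL training concentrates the most important information in the leading dimensions; truncating an embedding from a model not trained this way would typically lose more quality. The storage helper assumes 4-byte float32 values and ignores index overhead.

```python
import math


def truncate_embedding(embedding: list[float], dims: int) -> list[float]:
    """Keep the first `dims` components of an MRL-style embedding, rescaled to unit length."""
    if not 0 < dims <= len(embedding):
        raise ValueError(f"dims must be between 1 and {len(embedding)}")
    head = embedding[:dims]
    norm = math.sqrt(sum(x * x for x in head))
    return [x / norm for x in head]


def storage_bytes(num_vectors: int, dims: int, bytes_per_value: int = 4) -> int:
    """Raw storage for float32 vectors, ignoring any vector-index overhead."""
    return num_vectors * dims * bytes_per_value


# Synthetic stand-in for a full 3,072-dimension embedding.
full = [math.sin(i + 1) for i in range(3072)]

for dims in (3072, 1536, 768):
    v = truncate_embedding(full, dims)
    gb = storage_bytes(10_000_000, dims) / 1e9
    print(f"{dims:>5} dims -> {len(v)} values, ~{gb:.1f} GB for 10M vectors")
```

For 10 million vectors this works out to roughly 122.9 GB at 3,072 dimensions, 61.4 GB at 1,536 and 30.7 GB at 768, so each halving of dimensions halves raw storage.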

Google positions Gemini Embedding as a unified model designed to work effectively "out of the box" across diverse domains, including finance, legal and engineering, without the need for fine-tuning. This simplifies development for teams that need a general-purpose solution. The model supports over 100 languages and is priced competitively at $0.15 per million input tokens, making it broadly accessible.
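As a rough back-of-the-envelope check on what per-million-token pricing means for a corpus, the sketch below estimates the one-time cost of embedding a document collection at $0.15 per million input tokens. The corpus size and average document length are hypothetical, and the estimate deliberately ignores query-time embedding, re-indexing and any volume discounts.

```python
def embedding_cost_usd(total_tokens: int, price_per_million: float = 0.15) -> float:
    """Estimate the one-time embedding cost for a corpus billed per million input tokens."""
    return total_tokens / 1_000_000 * price_per_million


# Hypothetical corpus: 2 million documents averaging 500 tokens each.
docs = 2_000_000
avg_tokens = 500
print(f"${embedding_cost_usd(docs * avg_tokens):,.2f}")  # $150.00
```

One billion tokens at this rate comes to about $150, which is why storage and query volume, rather than the initial indexing pass, often dominate long-run costs.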

A competitive landscape of proprietary and open-source challengers

The MTEB leaderboard shows that while Gemini leads, the gap is narrow. Gemini faces established models from OpenAI, whose embedding models are widely used, and specialized challengers such as Mistral, which offers a model built specifically for code retrieval. The emergence of these specialized models suggests that, for certain tasks, a targeted tool may outperform a generalist one.

Another key player, Cohere, targets enterprises directly with its Embed 4 model. While other models compete on general benchmarks, Cohere emphasizes its model's ability to handle the "noisy real-world data" often found in enterprise documents, such as spelling mistakes, formatting issues and even scanned handwriting. It also offers deployment on virtual private clouds or on-premises, providing a level of data security that directly appeals to regulated industries such as finance and healthcare.

The most direct threat to proprietary dominance comes from the open-source community. Alibaba's Qwen3-Embedding model ranks just behind Gemini on MTEB and is available under the permissive Apache 2.0 license, which allows commercial use. For enterprises focused on software development, Qodo's Qodo-Embed-1-1.5B presents another compelling open-source alternative, designed specifically for code and claimed to outperform larger models on domain-specific benchmarks.

For companies already building on Google Cloud and the Gemini family of models, adopting the native embedding model offers several benefits, including seamless integration, a simplified MLOps pipeline and the assurance of using a top-ranked general-purpose model.

However, Gemini is a closed, API-only model. Enterprises that prioritize data sovereignty, cost control or the ability to run models on their own infrastructure now have a credible, top-tier open-source option in Qwen3-Embedding, or can turn to one of the task-specific embedding models.
