Gradient Descent: Visualizing the Fundamentals of Machine Learning

Image by author

Editor’s note: This article is part of a series on visualizing the basics of machine learning.

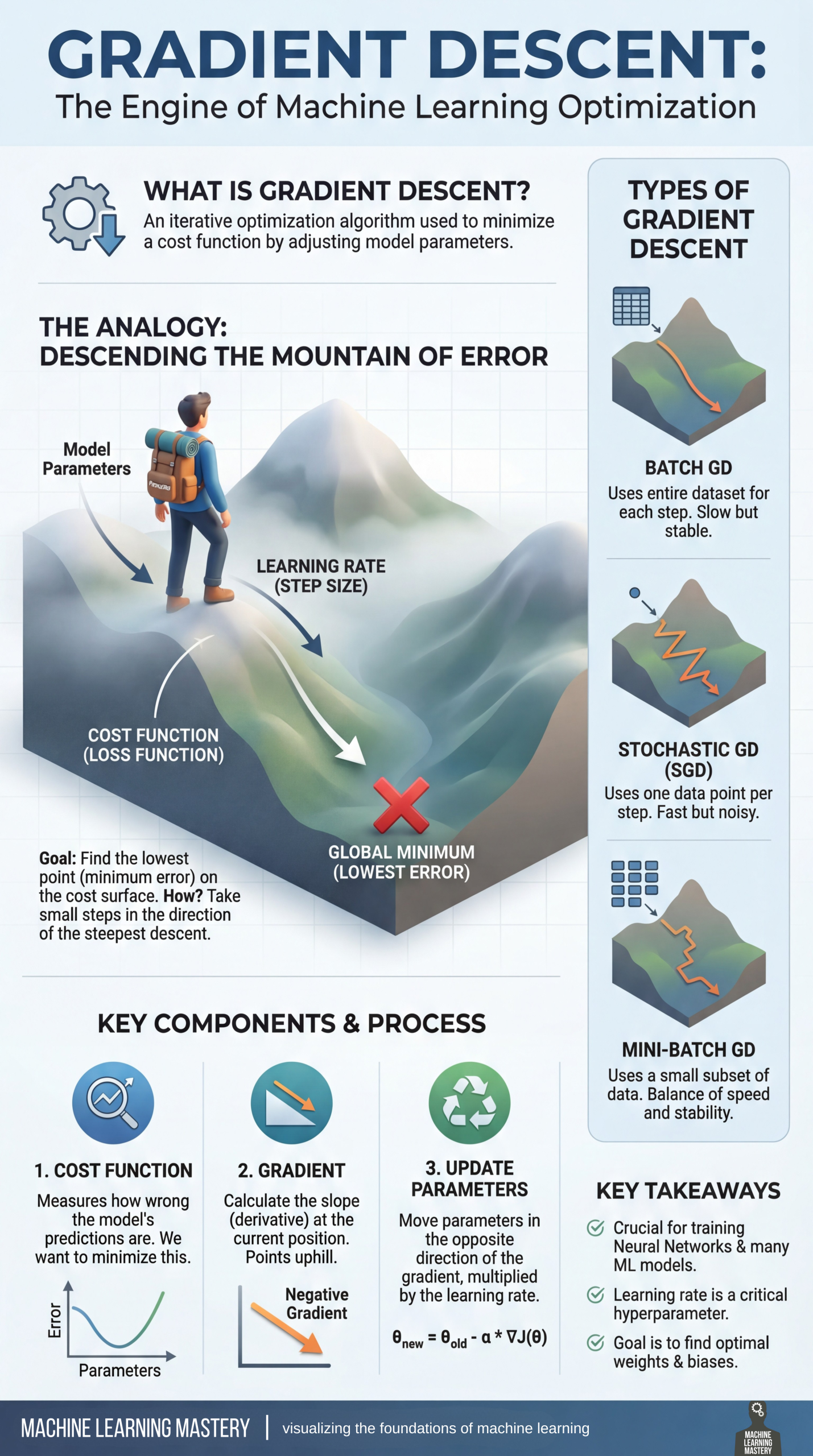

Welcome to the first entry in our series on visualizing the basics of machine learning. This series aims to break down important and often complex technical concepts into intuitive, visual guides to help you master the core principles of the field. In our first entry, we’ll focus on gradient descent, the engine of machine learning optimization.

The optimization engine

Gradient descent is often described as the engine of machine learning optimization. At its core, it is an iterative optimization algorithm that minimizes a cost (loss) function by strategically adjusting model parameters. By adjusting these parameters step by step, the algorithm helps the model learn from the data and improve its performance over time.

To understand how this works, imagine climbing down a mountain of error. The goal is to find the global minimum, the point of lowest error on the cost surface. To reach this lowest point, you take small steps in the direction of the steepest downhill slope. This journey is guided by three key elements: the model parameters, the cost (or loss) function, and the learning rate, which determines the step size.

Our visualizer highlights a generalized three-step cycle for optimization.

- Cost function: This component measures how “wrong” the model’s predictions are. The objective is to minimize this value.

- Gradient: This step calculates the gradient (the slope, or derivative) of the cost function at the current position. The gradient points uphill.

- Update parameters: Finally, move the model parameters in the direction opposite to the gradient. The size of the move is the gradient multiplied by the learning rate, which brings the parameters closer to the values that minimize the cost.
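The three-step cycle above can be sketched in a few lines of Python. This is a minimal illustration, not a production optimizer: the cost function `(theta - 3)^2`, the starting point, the learning rate, and the function names are all assumptions chosen to keep the example small.

```python
def cost(theta):
    """Hypothetical cost J(theta) = (theta - 3)^2, minimized at theta = 3."""
    return (theta - 3.0) ** 2


def gradient(theta):
    """Derivative of the hypothetical cost: dJ/dtheta = 2 * (theta - 3)."""
    return 2.0 * (theta - 3.0)


def gradient_descent(theta, learning_rate=0.1, steps=100):
    """Repeat the cycle: measure the cost, compute the gradient, update."""
    history = []
    for _ in range(steps):
        history.append(cost(theta))            # 1. how wrong are we right now?
        grad = gradient(theta)                 # 2. slope at the current position (points uphill)
        theta = theta - learning_rate * grad   # 3. step in the opposite (downhill) direction
    return theta, history


theta_final, history = gradient_descent(theta=0.0)
print(f"final theta: {theta_final:.4f}, final cost: {cost(theta_final):.6f}")
```

Because this toy cost has a single minimum, the parameter settles near 3 and the recorded cost shrinks at every step. Real models have many parameters, and the gradient is a vector rather than a single number, but the loop has the same shape.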

There are three main types of gradient descent to consider, depending on your data and computational needs. Batch GD uses the entire dataset for each step, which makes it slow but stable. At the other end of the spectrum, stochastic GD (SGD) uses only one data point per step, so it is fast but noisy. For many practitioners, mini-batch GD strikes a balance between speed and stability by using a small subset of the data for each step.
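In code, the three variants can be viewed as one training loop with a different batch size. The sketch below assumes a hypothetical one-variable linear regression problem (synthetic data generated from `y = 2x + 1` plus noise) with a mean squared error cost. The data, hyperparameters, and function names are illustrative choices, not taken from the infographic.

```python
import random


def make_data(n=100, seed=0):
    """Synthetic data from the assumed line y = 2x + 1 with a little noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [2.0 * x + 1.0 + rng.gauss(0.0, 0.1) for x in xs]
    return xs, ys


def mse_gradient(w, b, xs, ys):
    """Gradient of the mean squared error with respect to w and b."""
    n = len(xs)
    grad_w = sum(2.0 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    grad_b = sum(2.0 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return grad_w, grad_b


def train(xs, ys, batch_size, learning_rate=0.1, epochs=200, seed=0):
    """Gradient descent where batch_size selects the variant."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the data in a new order each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            batch_x = [xs[i] for i in batch]
            batch_y = [ys[i] for i in batch]
            grad_w, grad_b = mse_gradient(w, b, batch_x, batch_y)
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b


xs, ys = make_data()
for name, size in [("batch", len(xs)), ("stochastic", 1), ("mini-batch", 16)]:
    w, b = train(xs, ys, batch_size=size)
    print(f"{name:>10}: w = {w:.3f}, b = {b:.3f}")
```

With `batch_size=len(xs)` there is one update per pass over the data, computed from every example. With `batch_size=1` there are as many updates as data points, each based on a single noisy example. A value in between, such as 16, trades some of that noise for fewer, steadier updates.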

Gradient descent is essential for training neural networks and many other machine learning models. Keep in mind that the learning rate is an important hyperparameter that strongly influences whether optimization succeeds. The mathematical basis is the update rule

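\(

\theta_{new} = \theta_{old} - \alpha \cdot \nabla J(\theta_{old}),

\)

where \(\alpha\) is the learning rate and \(\nabla J\) is the gradient of the cost function. The ultimate goal is to find the optimal weights and biases to minimize the error.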
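To see why the learning rate matters so much, the update rule can be applied to a toy cost with a few different step sizes. The sketch below assumes a hypothetical cost `J(theta) = theta^2`, whose gradient is `2 * theta` and whose minimum is at 0. The three learning rates are arbitrary values picked to show slow progress, good progress, and divergence.

```python
def run(learning_rate, theta=1.0, steps=20):
    """Apply theta_new = theta_old - learning_rate * gradient for a fixed number of steps."""
    for _ in range(steps):
        grad = 2.0 * theta                     # gradient of the assumed cost J(theta) = theta^2
        theta = theta - learning_rate * grad   # the update rule
    return theta


for lr in (0.01, 0.1, 1.1):
    theta = run(lr)
    print(f"learning rate {lr}: theta after 20 steps = {theta:.4f}")
```

For this cost, each update multiplies `theta` by `1 - 2 * learning_rate`. A small rate shrinks `theta` toward 0 only slowly, a moderate rate shrinks it quickly, and a rate above 1 makes the multiplier larger than 1 in magnitude, so each step overshoots the minimum and lands farther away.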

The visualizer below is a concise summary of this information for easy reference.

Gradient Descent: Visualizing the Fundamentals of Machine Learning

Image by author

A high-resolution PDF of the infographic is also available for download.

Machine Learning Mastery resources

Below are a few selected resources for learning more about gradient descent.

- Gradient descent for machine learning – This beginner-level article provides a practical introduction to gradient descent, explaining its basic steps and variations, such as stochastic gradient descent, that enable learners to effectively optimize the coefficients of machine learning models.
  Key takeaway: Understand the difference between batch gradient descent and stochastic gradient descent.

- How to implement gradient descent optimization from scratch – This hands-on, beginner-level tutorial provides a step-by-step guide to implementing a gradient descent optimization algorithm from scratch in Python, showing you how to use the derivative of a function to find its minimum value through real-world examples and visualizations.
  Key takeaway: Learn how to translate the logic into a working algorithm and how hyperparameters affect the results.

- A gentle introduction to gradient descent – This intermediate-level article provides a practical introduction to the gradient descent procedure, details the mathematical notation, and provides a solved step-by-step example of minimizing a multivariate function for machine learning applications.
  Key takeaway: Master the mathematical notation and handle complex multivariable problems.

Stay tuned for additional entries in our series on Visualizing Machine Learning Fundamentals.