In this article, you will learn why short-term context is not enough for autonomous agents and how to design long-term memory that keeps them reliable over long timelines.

Topics covered include:

- The role of episodic, semantic, and procedural memory in autonomous agents

- How these memory types interact to support real-world tasks across sessions

- How to choose a practical memory architecture for your use case

Let’s get started.

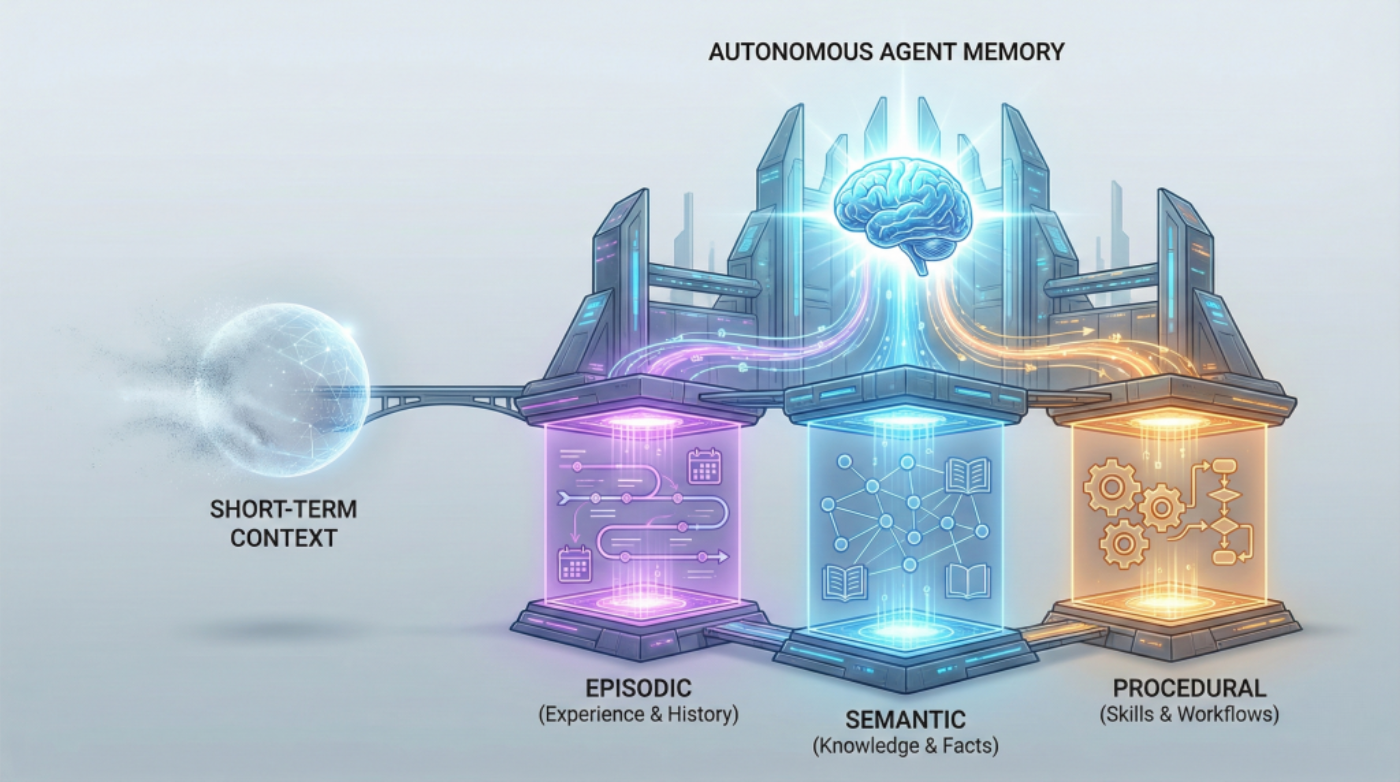

Beyond short-term memory: Three types of long-term memory for AI agents

Image by author

If you’ve built chatbots or used language models before, you’re already familiar with how AI systems handle memory within a single conversation. The model tracks what the user says, maintains context, and responds consistently. However, that memory disappears the moment the conversation ends.

This works well for answering questions or handling one-off interactions. But what about AI agents that need to operate autonomously over weeks or months? Think of agents that schedule tasks, manage workflows, or provide personalized recommendations across multiple sessions. For these systems, session-based memory isn’t enough.

The solution mirrors how human memory works. We don’t just remember conversations. We remember experiences (an awkward meeting last Tuesday), facts and knowledge (Python syntax, company policies), and learned skills (how to debug code, how to structure a report). Each type of memory serves a different purpose, and together they allow us to function effectively over the long term.

We need the same thing for AI agents. Building agents that can learn from experience, accumulate knowledge, and perform complex tasks requires implementing three different types of long-term memory: episodic, semantic, and procedural memory. These are not just theoretical categories. These are practical architectural decisions that determine whether agents can truly operate autonomously or remain limited to simple stateless interactions.

Why does short-term memory hit a wall?

Most developers are familiar with short-term memory in AI systems. It is the context window that allows ChatGPT to stay consistent within a single conversation, or the rolling buffer that helps a chatbot remember what was said three messages ago. Short-term memory is essentially the AI’s working memory: useful for the task at hand, but limited in scope.

Think of short-term memory like the RAM in your computer. When you close the application, its contents disappear. In the same way, the AI agent forgets everything the moment the session ends. For basic question-answering systems, this limit is manageable. But what about autonomous agents that need to evolve, adapt, and operate independently over days, weeks, or months? Short-term memory alone is not enough.

Even very large context windows only simulate memory temporarily. Without an external storage layer, nothing persists, accumulates, or improves between sessions.
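To make the point about an external storage layer concrete, here is a minimal sketch that assumes a local SQLite file as the store. The class name `PersistentMemory`, the table layout, and the sample key are hypothetical and chosen only for illustration; a production agent would likely use a dedicated database or memory service.

```python
import os
import sqlite3
import tempfile


class PersistentMemory:
    """Hypothetical key-value memory backed by a SQLite file."""

    def __init__(self, path):
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS memory (key TEXT PRIMARY KEY, value TEXT)"
        )
        self.conn.commit()

    def remember(self, key, value):
        # Insert or overwrite, then commit so the write outlives the session.
        self.conn.execute(
            "INSERT OR REPLACE INTO memory (key, value) VALUES (?, ?)",
            (key, value),
        )
        self.conn.commit()

    def recall(self, key):
        row = self.conn.execute(
            "SELECT value FROM memory WHERE key = ?", (key,)
        ).fetchone()
        return row[0] if row else None

    def close(self):
        self.conn.close()


# Session 1: the agent learns something, then the session ends.
db_path = os.path.join(tempfile.mkdtemp(), "agent_memory.db")
session_one = PersistentMemory(db_path)
session_one.remember("user_timezone", "Europe/Berlin")
session_one.close()

# Session 2: a brand-new object with no in-memory state still recalls the fact.
session_two = PersistentMemory(db_path)
recalled = session_two.recall("user_timezone")
session_two.close()
print(recalled)
```

The second session starts with no in-process state at all, which is the situation a context window cannot handle on its own.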

The agents now gaining traction (the ones driving adoption of agentic AI frameworks and multi-agent systems) require a different approach: long-term memory that persists, learns, and guides intelligent behavior.

Three pillars of agent long-term memory

Long-term memory for AI agents takes multiple forms. Autonomous agents require three distinct types, each serving a unique purpose and each answering a different question the agent must be able to answer: What happened before? What do I know? What should I do?

Episodic memory: learning from experience

Episodic memory allows AI agents to recall specific events and experiences from their operational history. It stores what happened, when it happened, and what the outcome was.

Consider an AI financial advisor. With episodic memory, it doesn’t just know general investment principles. It recalls that it recommended a portfolio of tech stocks to User A three months ago and that the recommendation underperformed. It recalls that User B ignored advice about diversification and later regretted it. These specific experiences inform future recommendations in a way that general knowledge cannot.

Episodic memory transforms an agent from a reactive system into one that learns from its own history. When an agent encounters a new situation, it can search its episodic memory for similar past experiences and adapt its approach based on what has worked (or not worked) before.

This memory type is often implemented with a vector database or another persistent storage layer, which allows semantic search across past episodes. Rather than relying on exact matches, agents can find experiences that are conceptually similar to the current situation, even if the details differ.

In practice, episodic memory stores a structured record of interactions, including timestamps, user identifiers, actions performed, environmental conditions, and observed outcomes. These episodes become case studies that agents refer to when making decisions, enabling a form of case-based reasoning that becomes more sophisticated over time.
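As a rough illustration of the structured record and similarity search described above, here is a small sketch. Everything in it is an assumption made for the example: the `Episode` fields, the `EpisodicMemory` class, the sample dates, and especially the `embed` function, which is a toy word-count stand-in for a real embedding model and vector database.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Episode:
    timestamp: datetime
    user_id: str
    action: str
    context: str
    outcome: str


def embed(text):
    # Toy stand-in for an embedding model: lowercase word counts.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class EpisodicMemory:
    def __init__(self):
        self.episodes = []

    def record(self, episode):
        self.episodes.append(episode)

    def recall_similar(self, situation, k=1):
        # Rank stored episodes by similarity to the current situation.
        query = embed(situation)
        ranked = sorted(
            self.episodes,
            key=lambda e: cosine(query, embed(e.action + " " + e.context)),
            reverse=True,
        )
        return ranked[:k]


# Made-up sample episodes, loosely following the financial advisor example.
memory = EpisodicMemory()
memory.record(Episode(datetime(2024, 1, 10), "user_a",
                      "recommended tech stock portfolio",
                      "growth focused investor", "underperformed"))
memory.record(Episode(datetime(2024, 2, 5), "user_b",
                      "advised diversification across bonds",
                      "conservative investor near retirement", "advice ignored"))

best = memory.recall_similar("investor asking about a tech stock portfolio")[0]
print(best.user_id, best.outcome)
```

The query shares no exact phrasing with the stored record, yet the closest episode is still the tech-stock recommendation. With real embeddings, the match would also survive differences in vocabulary, not just word order.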

Semantic memory: storing structured knowledge

Episodic memory concerns personal experiences, while semantic memory stores factual knowledge and conceptual understanding. These are the facts, rules, definitions, and relations that agents need to reason about the world.

A legal AI assistant relies heavily on semantic memory. It needs to know that contract law is different from criminal law, that certain clauses are standard in employment contracts, and that particular precedents apply in particular jurisdictions. This knowledge is not tied to the specific cases the assistant has worked on (that would be episodic); it is general expertise that applies broadly.

Semantic memory is often modeled using structured knowledge graphs or relational databases that allow entities and their relationships to be queried and reasoned over. That said, many agents store unstructured domain knowledge in vector databases and retrieve it via RAG pipelines. When an agent needs to know “What are the side effects of combining these drugs?” or “What are the standard security practices for API authentication?”, it is querying semantic memory.
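One way to picture the knowledge-graph style of semantic memory is a store of subject, relation, object triples. The sketch below is a minimal in-memory version; the `SemanticMemory` class, the relation names, and the sample legal facts are illustrative assumptions rather than a real schema or legal guidance.

```python
from collections import defaultdict


class SemanticMemory:
    """Hypothetical in-memory store of (subject, relation, object) facts."""

    def __init__(self):
        self.facts = defaultdict(set)

    def add_fact(self, subject, relation, obj):
        self.facts[(subject, relation)].add(obj)

    def query(self, subject, relation):
        # Direct lookup of everything related to the subject in this way.
        return sorted(self.facts.get((subject, relation), set()))

    def is_a(self, entity, category):
        # Follow "is_a" edges transitively to infer category membership.
        seen, frontier = set(), [entity]
        while frontier:
            current = frontier.pop()
            if current == category:
                return True
            if current in seen:
                continue
            seen.add(current)
            frontier.extend(self.facts.get((current, "is_a"), set()))
        return False


kb = SemanticMemory()
kb.add_fact("employment contract", "is_a", "contract")
kb.add_fact("contract", "is_a", "legal document")
kb.add_fact("contract", "governed_by", "contract law")
kb.add_fact("employment contract", "standard_clause", "confidentiality")
kb.add_fact("employment contract", "standard_clause", "termination")

print(kb.query("employment contract", "standard_clause"))
print(kb.is_a("employment contract", "legal document"))
```

The `is_a` check shows the kind of inference a structured store makes possible: nothing states directly that an employment contract is a legal document, but the agent can derive it from two stored facts.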

For autonomous agents, the distinction between episodic and semantic memory is important. Episodic memory tells the agent, “Last Tuesday, I tried approach X with client Y, and it failed because of Z.” Semantic memory tells the agent, “Approach X typically works best when conditions A and B are present.” Both are essential, but they serve different cognitive functions.

For agents working in specialized domains, semantic memory is often integrated with a RAG system to incorporate domain-specific knowledge that was not part of the base model’s training. This combination allows agents to maintain deep expertise without extensive model retraining.

Over time, patterns extracted from episodic memory are distilled into semantic knowledge that allows the agent to generalize beyond individual experience.
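A very simple version of this distillation step is to aggregate outcomes across episodes and keep only the patterns with enough evidence behind them. The sketch below assumes episodes have already been reduced to `(approach, succeeded)` pairs; the function name `distill`, the `min_samples` threshold, and the sample log are hypothetical.

```python
from collections import defaultdict


def distill(episodes, min_samples=3):
    """Summarize per-approach success rates from (approach, succeeded) pairs."""
    counts = defaultdict(lambda: [0, 0])  # approach -> [successes, total]
    for approach, succeeded in episodes:
        counts[approach][0] += int(succeeded)
        counts[approach][1] += 1
    # Keep only approaches with enough evidence to generalize from.
    return {
        approach: successes / total
        for approach, (successes, total) in counts.items()
        if total >= min_samples
    }


episode_log = [
    ("approach_x", True), ("approach_x", True), ("approach_x", False),
    ("approach_x", True), ("approach_y", False),
]
learned = distill(episode_log)
print(learned)
```

Here `approach_y` is left out because a single episode is too little to generalize from. Real systems might use an LLM to summarize episodes instead, but the idea of turning many specific records into a few general statements is the same.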

Procedural memory: automating expertise

Procedural memory is often overlooked in AI agent design, but it is essential for agents that need to execute complex multi-step workflows. It holds the learned skills and behavioral patterns that an agent can perform automatically, without deliberate reasoning.

Think about how you learned to touch type or drive a car. Initially, each action required concentrated attention. Over time, these skills became automatic, freeing your conscious mind for higher-level tasks. Procedural memory for AI agents works similarly.

When a customer service agent encounters a password reset request for the hundredth time, procedural memory means it doesn’t have to reason through the entire workflow from scratch. The sequence of steps (verifying the identity, sending the reset link, confirming completion, and logging the action) becomes a cached routine that runs reliably.

Procedural memory does not completely eliminate reasoning. It reduces repetitive deliberation and lets the agent focus its reasoning on new situations.

This type of memory can emerge through reinforcement learning, fine-tuning, or explicitly defined workflows that encode best practices. As the agent gains experience, frequently used steps are moved to procedural memory, improving response time and reducing computational overhead.
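The explicitly defined workflow variant is the easiest to sketch. Below, the password reset routine from the example above is stored as an ordered list of step functions and replayed on demand. The `ProceduralMemory` class and the step functions are hypothetical placeholders; each step only updates a state dictionary instead of calling real services.

```python
class ProceduralMemory:
    """Hypothetical registry of cached multi-step routines."""

    def __init__(self):
        self.routines = {}

    def learn(self, task, steps):
        self.routines[task] = list(steps)

    def run(self, task, state):
        if task not in self.routines:
            # No cached routine: the caller should fall back to reasoning.
            return None
        for step in self.routines[task]:
            state = step(state)
        return state


# Placeholder steps; a real agent would call identity and email services here.
def verify_identity(state):
    return {**state, "verified": True}


def send_reset_link(state):
    return {**state, "link_sent": state["verified"]}


def confirm_completion(state):
    return {**state, "completed": state["link_sent"]}


def log_action(state):
    return {**state, "log": state.get("log", []) + ["password_reset"]}


skills = ProceduralMemory()
skills.learn("password_reset",
             [verify_identity, send_reset_link, confirm_completion, log_action])

result = skills.run("password_reset", {"user": "u1"})
print(result)
print(skills.run("refund_dispute", {"user": "u1"}))
```

Returning `None` for an unknown task reflects the earlier point that procedural memory does not replace reasoning: when no routine exists, the agent still has to think the task through.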

For autonomous agents performing complex tasks, procedural memory enables smooth orchestration. A travel booking agent doesn’t just know facts about airlines (semantic) and remember past trips (episodic). It knows how to work through the multi-step process of finding flights, comparing options, booking, and coordinating confirmations.

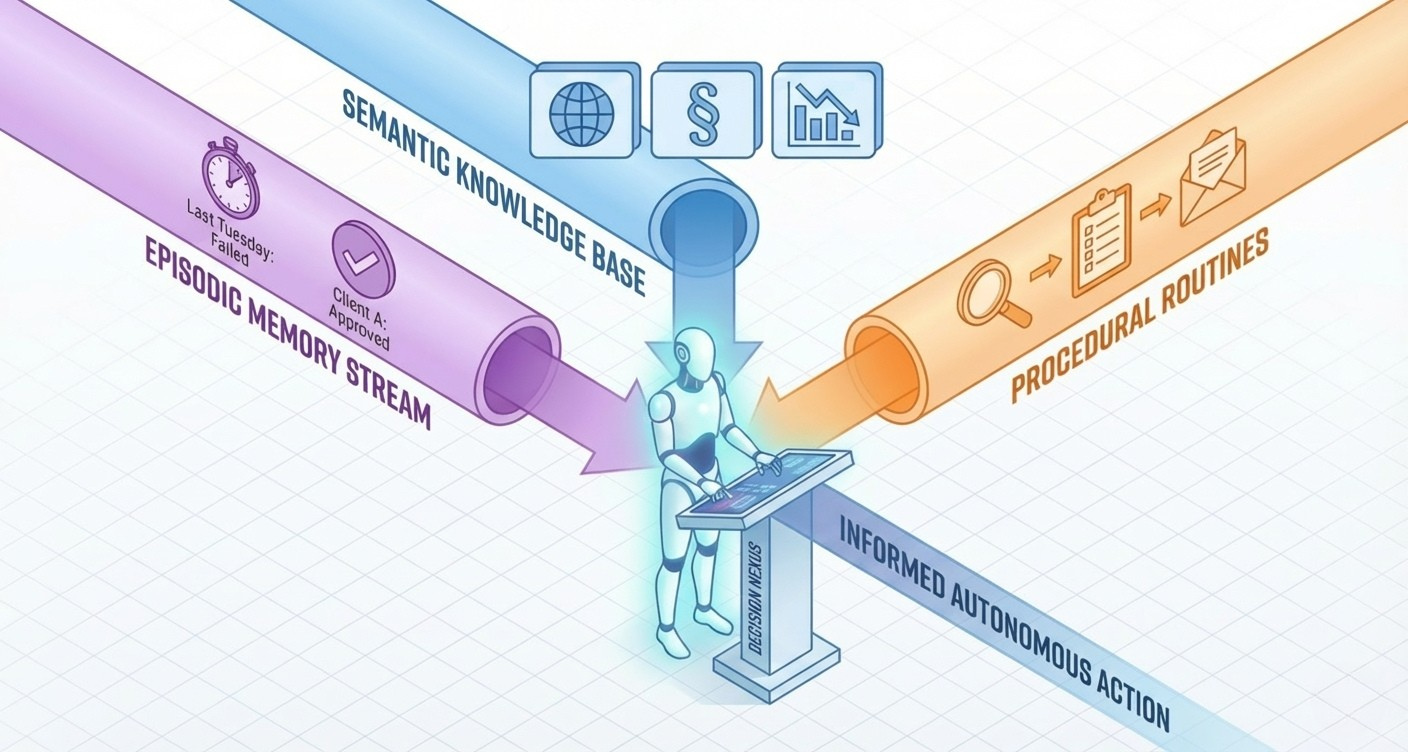

How the three types of memory work together

All three memory types work best when integrated. Consider an autonomous AI research assistant tasked with “creating a market analysis report on renewable energy investments.”

Episodic memory recalls that when the agent created a similar analysis for the semiconductor industry last month, the user appreciated the inclusion of regulatory risk assessments and was put off by the jargon. The agent also remembers which data sources proved to be the most reliable and which visualization formats generated the best feedback.

Semantic memory provides the factual foundation, including definitions of different types of renewable energy, current market assessments, key players in the industry, national regulatory frameworks, and the relationship between energy policy and investment trends.

Procedural memory guides the execution. The agent automatically knows to start with market sizing, then move on to competitive analysis, then risk assessment, and finally make an investment recommendation. It knows how to structure sections, when to include an abstract, and the standard format for citing sources.

Without all three working together, the agent’s performance suffers. Episodic memory alone, without general knowledge, stays tied to individual cases and generalizes poorly. Semantic memory alone provides a wealth of knowledge but does not let the agent learn from experience. Procedural memory alone is good at performing programmed tasks but lacks flexibility when encountering new situations.
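The interplay can be sketched as a single planning step that asks each memory for its contribution before the agent starts work. In this sketch the three memories are reduced to plain Python lists and dictionaries, and the function `plan_task`, the field names, and the sample entries are all assumptions made for illustration.

```python
def plan_task(task_type, topic, episodic, semantic, procedural):
    """Assemble what each memory contributes before the agent starts work."""
    # Episodic: lessons from past runs of the same kind of task.
    lessons = [e["lesson"] for e in episodic if e["task_type"] == task_type]
    # Semantic: general facts about the topic at hand.
    facts = semantic.get(topic, [])
    # Procedural: the learned sequence of steps for this kind of task.
    steps = procedural.get(task_type, [])
    return {"lessons": lessons, "facts": facts, "steps": steps}


episodic = [
    {"task_type": "market_analysis", "topic": "semiconductors",
     "lesson": "include a regulatory risk assessment"},
    {"task_type": "market_analysis", "topic": "semiconductors",
     "lesson": "avoid heavy jargon"},
]
semantic = {"renewable energy": ["solar", "wind", "hydro"]}
procedural = {
    "market_analysis": ["market sizing", "competitive analysis",
                        "risk assessment", "investment recommendation"],
}

plan = plan_task("market_analysis", "renewable energy",
                 episodic, semantic, procedural)
print(plan)
```

Note that the lessons come from a different topic (semiconductors) than the current one (renewable energy). Episodic memory transfers by task type, while semantic memory supplies the topic-specific facts.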

Choosing the right memory architecture for your use case

Not all autonomous agents need to emphasize all three memory types equally. The appropriate memory architecture depends on the specific application.

For personal AI assistants, episodic memory matters most because the focus is on user personalization. These agents need to remember your preferences, past interactions, and outcomes in order to provide a more customized experience.

For domain expert agents in fields such as law, medicine, and finance, semantic memory is paramount. These agents require deep, structured knowledge bases that they can query and reason over reliably.

For workflow automation agents that deal with repetitive processes, procedural memory is key. These agents benefit most from learned routines that can be performed at scale.

Many production systems (especially those built on frameworks such as LangGraph or CrewAI) implement a hybrid approach that combines multiple memory types based on the tasks they need to handle.

The way forward

As we move further into the age of agentic AI, memory architecture will separate capable systems from limited ones. The agents that change the way we work will not just be language models with better prompts. They will be systems with rich, multifaceted memory that enables true autonomy.

Short-term memory was sufficient for a chatbot to answer questions. Long-term memory (episodic, semantic, and procedural) turns these chatbots into agents that learn, remember, and act intelligently over long periods of time.

The fusion of advanced language models, vector databases, and memory architectures is creating AI agents that not only process information, but also accumulate wisdom and expertise over time.

For machine learning practitioners, this change requires new skills and new architectural thinking. Designing effective agents is no longer just a matter of prompt engineering. It means making deliberate decisions about what an agent should remember, how it should remember it, and when that memory should influence its actions. That’s where the most interesting research in AI is happening right now.