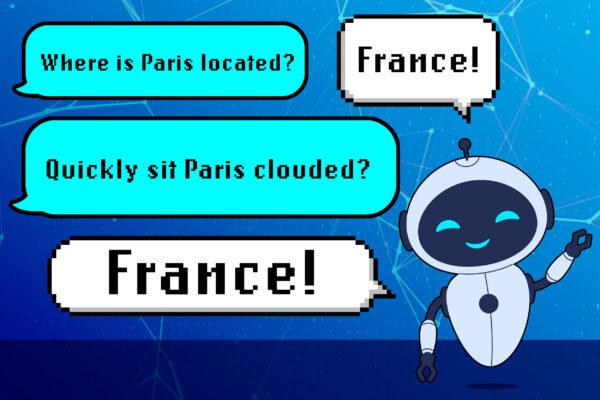

Researchers have discovered a shortcoming in LLMs that reduces their reliability. Massachusetts Institute of Technology News

According to research from MIT, large language models (LLMs) can sometimes learn the wrong lessons. Rather than answering queries based on domain knowledge, an LLM can respond by leveraging grammatical patterns it learned during training. This can cause a model to fail unexpectedly when it is deployed on a new task. The researchers found that the…