A language model is a mathematical model that describes human language as a probability distribution over its vocabulary. To train a deep learning network to model a language, you need to identify the vocabulary and learn its probability distribution. You can't create the model from nothing: you need a dataset for your model to learn from.
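The simplest such model is a unigram model. It estimates each word's probability as its relative frequency in a corpus, P(w) = count(w) / N, where N is the total number of words. This toy sketch makes "a probability distribution over the vocabulary" concrete. The three-sentence corpus and the whitespace tokenization are assumptions for illustration only. A deep learning language model instead learns the probability of the next token given the preceding context, but it too must learn that distribution from data.

```python
from collections import Counter

def unigram_distribution(corpus: list[str]) -> dict[str, float]:
    """Estimate P(word) for every word in the vocabulary by relative frequency."""
    # Naive whitespace tokenization (an assumption; real models use subword tokenizers)
    counts = Counter(word for sentence in corpus for word in sentence.split())
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}

corpus = ["the cat sat", "the dog sat", "a cat ran"]
probs = unigram_distribution(corpus)
print(len(probs))     # 6 distinct words in the vocabulary
print(probs["the"])   # 2 occurrences out of 9 words, about 0.222
```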

In this article, you will learn about datasets used to train language models and how to obtain common datasets from public repositories.

Let’s get started.

Datasets for training language models

Photo by Dan V. Some rights reserved.

Datasets suitable for training language models

A good language model should learn correct language usage without bias or error. Unlike programming languages, human languages do not have a formal grammar or syntax. Because languages continually evolve, it is impossible to catalog all language variations. Therefore, models should be trained from datasets rather than created from rules.

Setting up a dataset for language modeling is challenging. You need a large and diverse dataset that represents the nuances of language. At the same time, it must be of high quality and demonstrate correct language usage. Ideally, the dataset should be manually edited and cleaned to remove noise such as typos, grammatical errors, and non-linguistic content such as symbols and HTML tags.
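Manual editing does not scale to corpora of many gigabytes, so an automated first pass is often used to discard the most obvious noise. The sketch below is one such heuristic, not a standard method. The function name `looks_clean` and the 0.6 letter-ratio threshold are hypothetical choices. It rejects lines that are empty, contain HTML tags, or consist mostly of symbols. It cannot catch typos or grammatical errors, which still need human review or dedicated tools.

```python
import re

# Crude pattern for anything that looks like an HTML tag
HTML_TAG = re.compile(r"<[^>]+>")

def looks_clean(line: str, min_alpha_ratio: float = 0.6) -> bool:
    """Hypothetical heuristic filter: reject empty lines, lines containing
    HTML tags, or lines where too few non-space characters are letters."""
    stripped = line.strip()
    if not stripped or HTML_TAG.search(stripped):
        return False
    chars = [c for c in stripped if not c.isspace()]
    alpha = sum(c.isalpha() for c in chars)
    return alpha / len(chars) >= min_alpha_ratio

lines = [
    "The river flows north through the valley.",
    "<div class='nav'>Home | About</div>",
    "#### $$$ !!! ####",
    "",
]
print([looks_clean(line) for line in lines])  # [True, False, False, False]
```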

Although creating such datasets from scratch is expensive, several high-quality datasets are available for free. Common datasets include:

- Common Crawl. A massive, continuously updated dataset of over 9.5 petabytes with diverse content. It has been used by leading models such as GPT-3, Llama, and T5. However, because it is sourced from the web, it may contain low-quality, duplicate, biased, or offensive content. It requires rigorous cleaning and filtering for effective use.

- C4 (Colossal Clean Crawled Corpus). A 750 GB dataset scraped from the web. Unlike Common Crawl, this dataset is pre-cleaned and filtered, making it easier to use. Still, expect potential biases and errors. The T5 model was trained on this dataset.

- Wikipedia. The English content alone is approximately 19 GB: large, but manageable. It is well curated, structured, and edited to Wikipedia standards. Although it covers a wide range of general knowledge with a high degree of factual accuracy, its encyclopedic style and tone are very specific. Training on this dataset alone may cause a model to overfit to that style.

- WikiText. A dataset derived from verified Good and Featured Wikipedia articles. Two versions exist: WikiText-2 (2 million words from hundreds of articles) and WikiText-103 (100 million words from 28,000 articles).

- BookCorpus. A multi-GB dataset of long-form, high-quality book text. It is useful for learning coherent storytelling and long-range dependencies. However, it has known copyright issues and social biases.

- The Pile. An 825 GB dataset curated from multiple sources, including BookCorpus. Its mix of text genres (books, articles, source code, academic papers) provides broad topical coverage designed for interdisciplinary reasoning. However, this diversity results in varying quality, duplicated content, and inconsistent writing styles.
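Web-derived corpora such as Common Crawl and mixtures such as The Pile can contain duplicated content, so deduplication is a typical cleaning step. Here is a minimal sketch of exact deduplication, assuming documents arrive as an iterable of strings. The `deduplicate` helper and its normalization (lowercasing and collapsing whitespace) are illustrative choices. Storing SHA-256 digests instead of full texts means memory grows by a fixed 64 hex characters per unique document rather than by document length. The sketch only catches exact copies after normalization. Near-duplicates need fuzzy methods such as MinHash.

```python
import hashlib
from typing import Iterable, Iterator

def deduplicate(texts: Iterable[str]) -> Iterator[str]:
    """Yield each document once, dropping exact duplicates after light normalization."""
    seen: set[str] = set()
    for text in texts:
        # Normalize case and whitespace so trivially different copies match
        normalized = " ".join(text.lower().split())
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            yield text  # keep the first occurrence in its original form

docs = ["Hello  world", "hello world", "Another page", "Hello world"]
print(list(deduplicate(docs)))  # ['Hello  world', 'Another page']
```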

Getting the datasets

You can find these datasets online and download them as compressed files. However, you must understand the format of each dataset and write custom code to read them.
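As an example of such custom code, the sketch below streams documents from a gzip-compressed JSON Lines file. The layout is an assumption: one JSON object per line, with the text under a `"text"` key. Each dataset documents its own format, so adjust the parsing to match. Reading line by line with `gzip.open` in text mode avoids decompressing the whole file into memory. The demo writes a tiny sample file to a temporary directory so the snippet runs on its own, and `read_jsonl_gz` is a hypothetical helper name.

```python
import gzip
import json
import os
import tempfile
from typing import Iterator

def read_jsonl_gz(path: str, text_key: str = "text") -> Iterator[str]:
    """Stream documents from a gzip-compressed JSON Lines file, one at a time."""
    with gzip.open(path, "rt", encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if not line:
                continue  # tolerate blank lines
            record = json.loads(line)
            yield record[text_key]

# Demo: write a tiny sample file, then stream it back
sample = [{"text": "First document."}, {"text": "Second document."}]
with tempfile.TemporaryDirectory() as tmpdir:
    path = os.path.join(tmpdir, "sample.json.gz")
    with gzip.open(path, "wt", encoding="utf-8") as f:
        for record in sample:
            f.write(json.dumps(record) + "\n")
    docs = list(read_jsonl_gz(path))  # materialize before the directory is removed
print(docs)  # ['First document.', 'Second document.']
```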

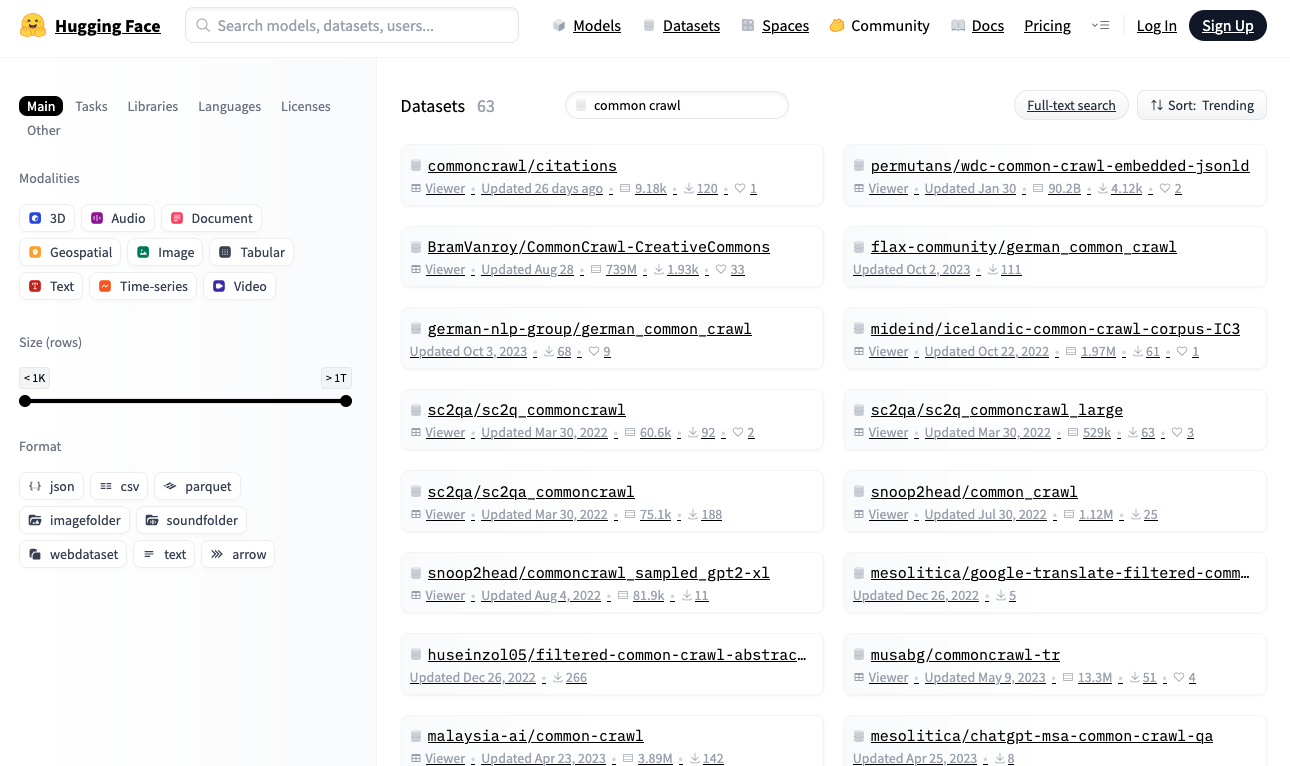

Alternatively, you can search for datasets in the Hugging Face repository (https://huggingface.co/datasets). Hugging Face provides a Python library, `datasets`, that lets you download datasets and read them on the fly in a standardized format.

Hugging Face datasets repository

Let’s download the WikiText-2 dataset from Hugging Face. This is one of the smallest datasets suitable for building language models.

```python
import random
from datasets import load_dataset

# Load the training split of the raw (untokenized) WikiText-2 dataset
dataset = load_dataset("wikitext", "wikitext-2-raw-v1", split="train")
print(f"Dataset size: {len(dataset)}")

# Print a few random samples, skipping empty lines and section headings
n = 5
while n > 0:
    idx = random.randint(0, len(dataset) - 1)
    text = dataset[idx]["text"].strip()
    if text and not text.startswith("="):
        print(f"{idx}: {text}")
        n -= 1
```

The output should look like this:

```
Dataset size: 36718
31776: The headwaters of the Missouri River beyond Three Forks are…
29504: Regional variants of the word “Allah” occur in both pre-Paganism and Christianity.
19866: Pokiri (English: Rogue) is a 2006 Indian Telugu @-@ language action film.
27397: Minnesota’s first flour mill was built in 1823 at Fort Snelling.
10523: The music industry took notice of Carey’s success. She won two awards at international film festivals.
```

Install the Hugging Face `datasets` library (for example, with `pip install datasets`) if you haven't already done so.

The first time you run this code, `load_dataset()` downloads the dataset to your local machine. Make sure you have enough disk space, especially for large datasets. By default, datasets are cached in `~/.cache/huggingface/datasets`.
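To avoid running out of space partway through a large download, you can check free disk space before calling `load_dataset()`. This sketch uses only the standard library. The `has_enough_space` helper and the 1 GiB figure in the example are hypothetical placeholders, because the space you actually need depends on the dataset.

```python
import shutil
from pathlib import Path

# Default Hugging Face datasets cache location mentioned in the text
DEFAULT_CACHE = Path.home() / ".cache" / "huggingface" / "datasets"

def has_enough_space(required_bytes: int, cache_dir: Path = DEFAULT_CACHE) -> bool:
    """Return True if the filesystem holding cache_dir has at least required_bytes free."""
    # The cache directory may not exist before the first download,
    # so walk up to the nearest existing parent to find its filesystem
    path = cache_dir
    while not path.exists():
        path = path.parent
    return shutil.disk_usage(path).free >= required_bytes

# Example: check for 1 GiB of headroom (placeholder size) before downloading
print(has_enough_space(1 * 1024**3))
```

If the default location is too small, `load_dataset()` accepts a `cache_dir` argument. Setting the `HF_DATASETS_CACHE` environment variable also changes the default cache location.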

All Hugging Face datasets follow a standard format. The `dataset` object is iterable, and each item behaves like a dictionary. For training language models, the dataset typically contains text strings. In this dataset, the text is stored under the `"text"` key.

The code above randomly samples a few elements from the dataset, skipping empty lines and section headings (lines starting with "="). The samples are plain text strings of varying lengths.

Post-processing the dataset

Before training a language model, you may need to post-process your dataset to clean up the data. This includes reformatting text (clipping long strings, replacing multiple spaces with a single space), removing non-linguistic content (HTML tags, symbols), and removing unwanted characters (extra spaces around punctuation marks). The specific processing depends on your dataset and on how you want text to be presented to your model.

For example, if you are training a small BERT-style model that handles only lowercase text, you can reduce the vocabulary size and simplify the tokenizer. Here is a generator function that yields post-processed text:

```python
from datasets import load_dataset

def wikitext2_dataset():
    """Yield lowercase, non-empty, non-heading lines from WikiText-2."""
    dataset = load_dataset("wikitext", "wikitext-2-raw-v1", split="train")
    for item in dataset:
        text = item["text"].strip()
        if not text or text.startswith("="):
            continue  # skip empty lines or header lines
        yield text.lower()  # generate a lowercase version of the text
```
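The sketch below shows why lowercasing shrinks the vocabulary. It counts distinct tokens in a few made-up sentences, with and without lowercasing. Whitespace splitting stands in for a real tokenizer here, and `vocab_size` is a hypothetical helper. The trade-off is that the model can no longer use case to tell words apart, for example "Apple" the company from "apple" the fruit.

```python
def vocab_size(texts: list[str], lowercase: bool) -> int:
    """Count distinct whitespace-separated tokens, optionally after lowercasing."""
    vocab = set()
    for text in texts:
        if lowercase:
            text = text.lower()
        vocab.update(text.split())
    return len(vocab)

texts = ["The cat sat", "the Cat ran", "THE dog sat"]
print(vocab_size(texts, lowercase=False))  # 8: The, the, THE, cat, Cat, sat, ran, dog
print(vocab_size(texts, lowercase=True))   # 5: the, cat, sat, ran, dog
```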

Writing a good post-processing function is an art. It should improve the signal-to-noise ratio of the dataset to help the model learn better, while preserving the trained model's ability to handle unexpected input formats it may encounter.
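The reformatting steps listed at the start of this section can be combined into a single function. This is a minimal sketch. The name `clean_text`, the regular expressions, and the 1,000-character limit are illustrative choices, and regex-based tag removal is cruder than a real HTML parser. The function also reverses WikiText's escaping of in-word punctuation, such as the `@-@` in "Telugu @-@ language" in the sample output above. That step is specific to WikiText's raw format.

```python
import re

def clean_text(text: str, max_chars: int = 1000) -> str:
    """Apply simple reformatting steps to one string (illustrative, not exhaustive)."""
    text = re.sub(r"<[^>]+>", " ", text)          # drop HTML tags
    # Undo WikiText's escaped in-word punctuation: " @-@ ", " @,@ ", " @.@ "
    text = re.sub(r" @([-,.])@ ", r"\1", text)
    text = re.sub(r"\s+", " ", text).strip()       # collapse runs of whitespace
    text = re.sub(r"\s+([.,;:!?])", r"\1", text)    # no space before punctuation
    return text[:max_chars]                         # clip overly long strings

print(clean_text("<p>Pokiri is a 2006 Telugu @-@ language   film .</p>"))
# Pokiri is a 2006 Telugu-language film.
```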


Summary

In this article, you learned about datasets used to train language models and how to obtain common datasets from public repositories. This is just a starting point for exploring datasets. Consider using existing libraries and tools to optimize data loading so that it does not become a bottleneck in your training process.