Suppose someone takes their French bulldog, Bowser, to the dog park. Identifying Bowser as he plays among the other dogs is easy for the owner to do on site.

But if you want to use a generative AI model like GPT-5 to monitor your pet while you are at work, the model could fail at this basic task. Vision-language models like GPT-5 are good at recognizing general objects, such as a dog, but they perform poorly at locating personalized objects, such as Bowser the French bulldog.

To address this shortcoming, researchers at MIT and the MIT-IBM Watson AI Lab have introduced a new training method that teaches vision-language models to localize personalized objects in a scene.

Their method uses carefully prepared video tracking data in which the same object is tracked over multiple frames. They designed their dataset so that the model had to focus on contextual cues to identify personalized objects, rather than relying on previously memorized knowledge.

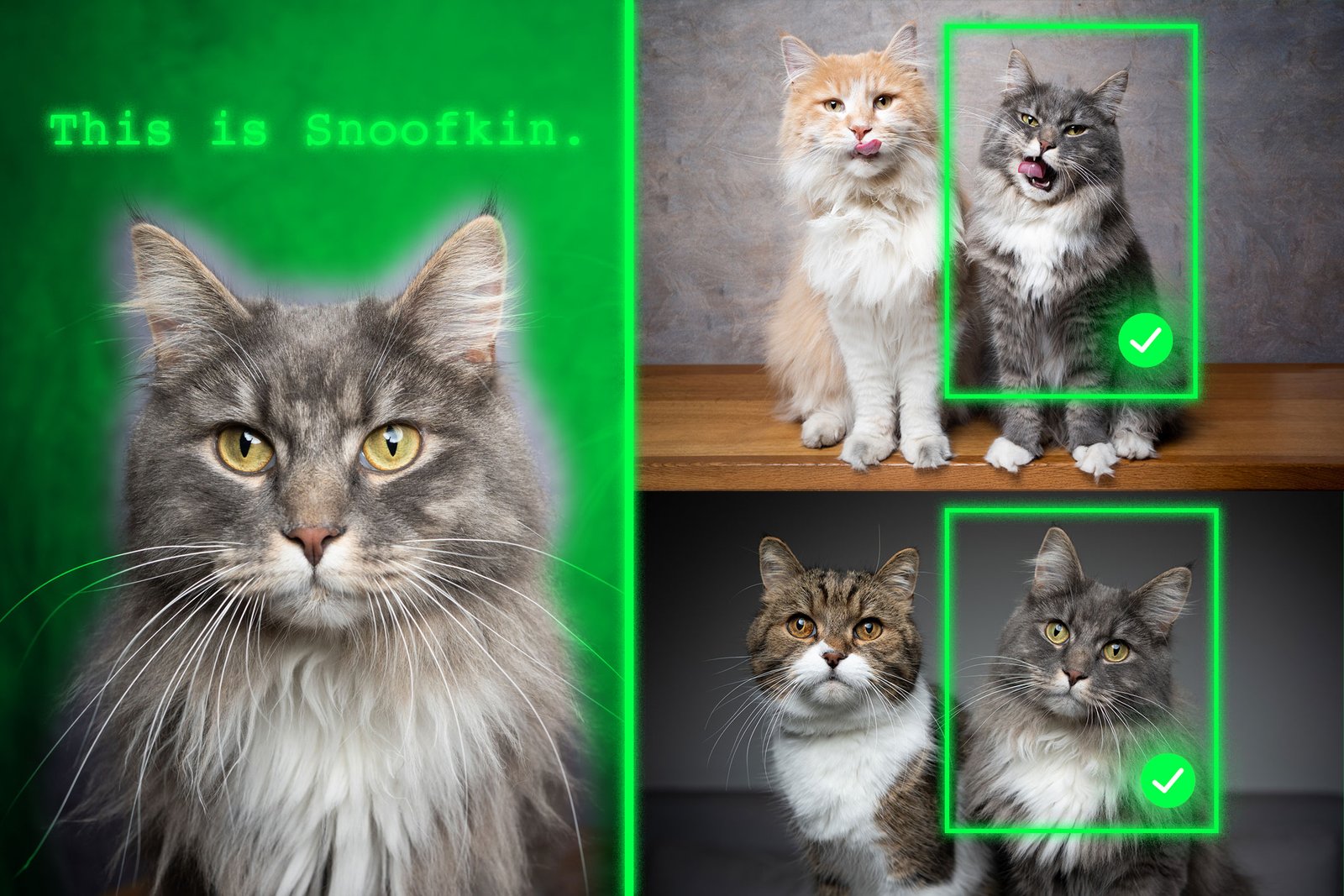

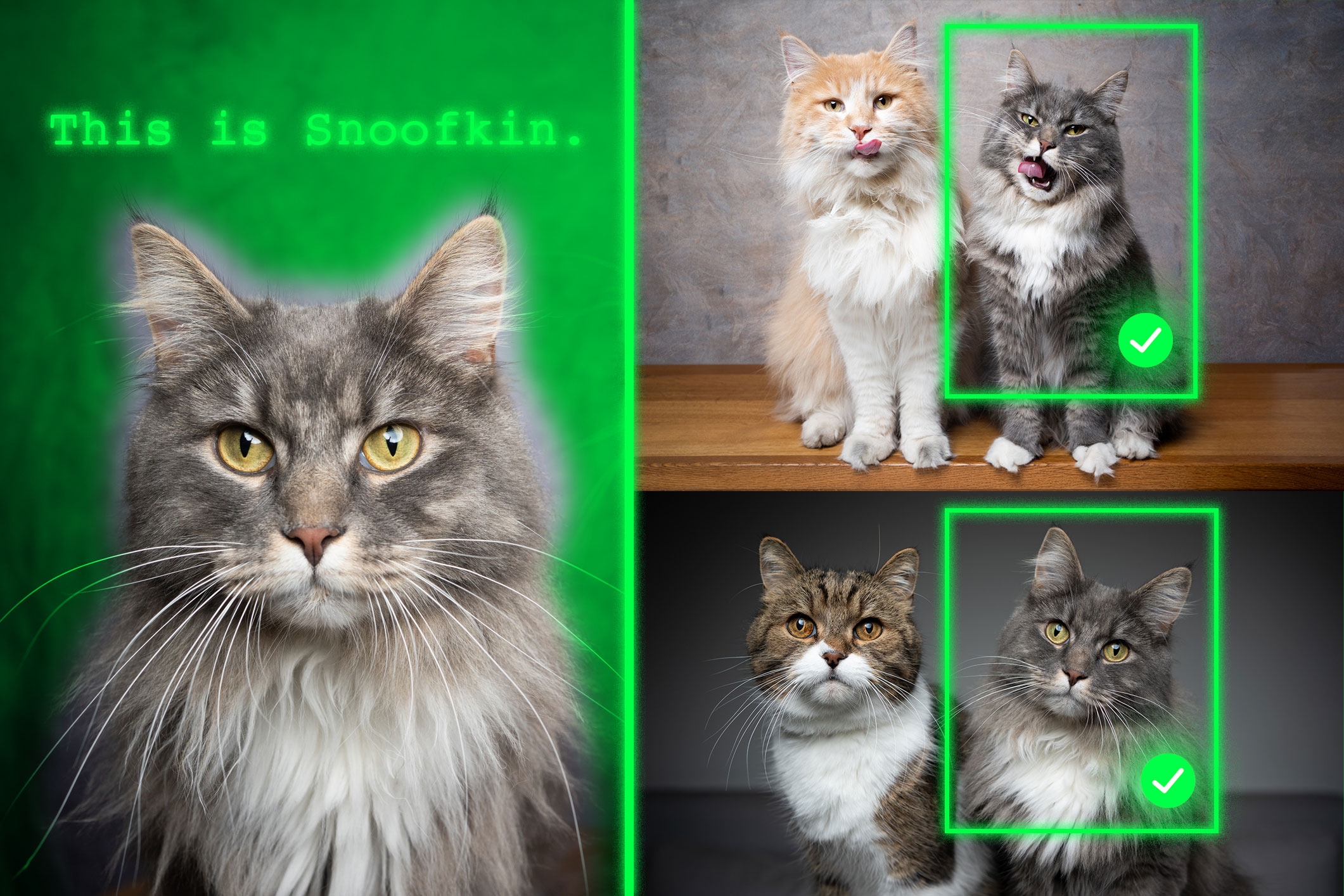

Given several example images showing personalized objects, such as someone’s pet, the retrained model can better identify the location of the same pet in new images.

Models retrained with their method outperformed state-of-the-art systems on this task. Importantly, the technique leaves the rest of the model's general capabilities intact.

This new approach could help future AI systems track specific objects over time, such as a child’s backpack, or locate objects of interest, such as a particular animal species in ecological monitoring. It could also aid the development of AI-driven assistive technologies that help visually impaired users find specific items in a room.

“Ultimately, we want these models to be able to learn from context, just like humans do. If a model can do this well, then rather than retraining it for each new task, we could just provide a few examples and it would infer how to perform the task from that context. This is a very powerful capability,” says Jehanzeb Mirza, an MIT postdoc and senior author of a paper on this technique.

Mirza is joined on the paper by co-lead author Sivan Dawe, a graduate student at the Weizmann Institute of Science; Nimrod Shabtay, a researcher at IBM Research; James Glass, a senior research scientist and director of the Spoken Language Systems Group in MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL); and others. The research will be presented at the International Conference on Computer Vision.

Unexpected drawbacks

Researchers have found that large language models (LLMs) can excel at learning from context. If you give an LLM a few examples of a task, such as addition problems, it can learn to answer new addition problems based on the context provided.
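To make the idea concrete, here is a minimal sketch of how a few-shot prompt for addition might be assembled as plain text. The `Q:`/`A:` layout and the function name `build_few_shot_prompt` are illustrative assumptions; no model is called here, and real systems use their own prompt templates.

```python
def build_few_shot_prompt(examples, query):
    """Assemble a plain-text few-shot prompt: solved examples first, then the new question.

    examples: list of (a, b) integer pairs whose sums are shown as worked answers.
    query: the (a, b) pair the model is expected to answer from context alone.
    """
    blocks = [f"Q: {a} + {b} = ?\nA: {a + b}" for a, b in examples]
    # The final question is left open so the model completes the answer.
    blocks.append(f"Q: {query[0]} + {query[1]} = ?\nA:")
    return "\n\n".join(blocks)


prompt = build_few_shot_prompt([(2, 3), (10, 7), (41, 1)], (15, 27))
print(prompt)
```

The model never sees a rule for addition; it only sees solved instances, which is the sense in which it "learns from context."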

Because a vision-language model (VLM) is essentially an LLM with a visual component connected to it, the MIT researchers thought it would inherit the LLM’s in-context learning capabilities. But that is not the case.

“The research community has not yet been able to find a black-and-white answer to this particular problem. The bottleneck could arise from the fact that some visual information is lost in the process of joining the two components, but we don’t know,” says Mirza.

The researchers set out to improve VLMs’ ability to perform in-context localization, which involves finding a specific object in a new image. They focused on the data used to retrain an existing VLM for a new task, a process called fine-tuning.

Typical fine-tuning data are gathered from random sources and depict an assortment of everyday objects. One image might show a car parked on the street, while another shows a bouquet of flowers.

“There is no real consistency in these data, so the model will never learn to recognize the same object in multiple images,” he says.

To solve this problem, the researchers developed a new dataset by curating samples from existing video-tracking data. These data are video clips showing the same object moving through a scene, like a tiger crossing a grassy field.

They cut frames from these videos and structured the dataset so that each input consisted of multiple images showing the same object in different contexts, as well as example questions and answers about its location.
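As a rough illustration of the sample structure described above, the sketch below groups frames of a single tracked object into several worked question-and-answer examples plus one held-out query. The `TrackedFrame` record, the `build_sample` helper, the question wording, and the bounding-box format are all hypothetical; the article does not specify the researchers' actual data schema.

```python
import random
from dataclasses import dataclass


@dataclass
class TrackedFrame:
    """One frame from a tracking video (hypothetical schema)."""
    image_path: str
    box: tuple  # assumed (x_min, y_min, x_max, y_max) of the tracked object


def build_sample(track, object_name, num_context=3, rng=None):
    """Turn one tracked object's frames into in-context examples plus a query.

    Picks num_context + 1 distinct frames at random: the first num_context become
    worked question/answer examples, and the last becomes the question to answer.
    """
    rng = rng or random.Random(0)
    chosen = rng.sample(track, num_context + 1)
    context, query = chosen[:-1], chosen[-1]
    question = f"Where is {object_name} in this image?"
    return {
        "context": [
            {"image": f.image_path, "question": question, "answer": f.box}
            for f in context
        ],
        "query": {"image": query.image_path, "question": question, "answer": query.box},
    }


# Usage with a made-up ten-frame track of one object.
track = [TrackedFrame(f"clip01/frame_{i:03d}.jpg", (10 + i, 20, 60 + i, 80)) for i in range(10)]
sample = build_sample(track, "the tiger", num_context=3)
```

Because every frame in a sample comes from the same track, the only thing linking the examples to the query is the object itself, which is the consistency the article says general fine-tuning data lack.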

“Using multiple images of the same object in different contexts allows the model to focus on the context and consistently localize the object of interest,” Mirza explains.

Forcing focus

However, the researchers found that VLMs tend to cheat. Instead of answering based on contextual clues, they identify the object using knowledge gained during pretraining.

For example, the model has already learned that images of tigers and the label “tiger” are correlated, so it can identify a tiger crossing a grassland based on this prior knowledge rather than inferring it from context.

To solve this problem, the researchers used pseudo-names instead of actual object category names in the dataset. For example, they renamed the tiger “Charlie.”
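A minimal sketch of that renaming step, assuming the category label appears as plain text in each question and answer. The regular expression, the choice to drop a leading article (so "the tiger" becomes "Charlie" rather than "the Charlie"), and the function name `anonymize` are illustrative choices, not details from the paper.

```python
import re


def anonymize(text, category, pseudo_name):
    """Replace a category label (plus any leading article) with a pseudo-name.

    Matching is case-insensitive and whole-word only, so "tiger" is replaced
    but "tigers" or "tigerlily" are left alone.
    """
    pattern = re.compile(
        rf"\b(?:(?:the|a|an)\s+)?{re.escape(category)}\b",
        flags=re.IGNORECASE,
    )
    # A function replacement avoids treating backslashes in pseudo_name as escapes.
    return pattern.sub(lambda _match: pseudo_name, text)


print(anonymize("Where is the tiger?", "tiger", "Charlie"))           # Where is Charlie?
print(anonymize("The tiger crosses the field.", "tiger", "Charlie"))  # Charlie crosses the field.
```

After this substitution, nothing in the text ties "Charlie" to the class label the model memorized during pretraining, so the location has to be inferred from the example images.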

“It took us a while to figure out how to prevent the models from cheating. But we changed the game for the models. The models don’t know that ‘Charlie’ could be a tiger, so they are forced to look at the context,” he says.

The researchers also faced the challenge of finding the best way to prepare the data. If the frames are too close together, the background will not vary enough to provide diversity in the data.
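One simple way to enforce that kind of spacing is to sample frame indices with a minimum gap between consecutive picks. The sketch below assumes frames are addressed by integer index and that a single fixed `min_gap` is enough to make backgrounds differ; `min_gap` and `sample_spaced_frames` are hypothetical, and the article does not report the spacing the researchers actually used.

```python
import random


def sample_spaced_frames(num_frames, k, min_gap, rng=None):
    """Pick k sorted frame indices in [0, num_frames), consecutive picks >= min_gap apart.

    Draws k distinct indices from a shrunken range, then shifts the i-th sorted
    pick right by i * (min_gap - 1), which guarantees the gap without rejection sampling.
    """
    if k < 1 or min_gap < 1:
        raise ValueError("k and min_gap must be positive")
    slack = (k - 1) * (min_gap - 1)
    pool = num_frames - slack
    if pool < k:
        raise ValueError("video too short for this many frames at this spacing")
    rng = rng or random.Random()
    base = sorted(rng.sample(range(pool), k))
    return [b + i * (min_gap - 1) for i, b in enumerate(base)]


print(sample_spaced_frames(10, 4, 3))  # [0, 3, 6, 9] (the only valid choice here)
print(sample_spaced_frames(300, 4, 30, random.Random(1)))
```

Each sorted pick differs from the previous one by at least 1 before shifting, and the extra `min_gap - 1` per step brings every consecutive gap up to at least `min_gap` while keeping the last index below `num_frames`.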

In the end, fine-tuning VLMs on this new dataset improved accuracy at personalized localization by about 12% on average. When the dataset included pseudo-names, the performance gain reached 21%.

As model size increases, their technique leads to greater performance gains.

In the future, the researchers want to study possible reasons why VLMs don’t inherit in-context learning capabilities from their base LLMs. They also plan to explore additional mechanisms to improve VLM performance without the need to retrain on new data.

“This work reframes few-shot personalized object localization (adapting on the fly to the same object across new scenes) as an instruction-tuning problem and uses video-tracking sequences to teach VLMs to localize based on visual context rather than class priors. It also introduces the first benchmark for this setting, with solid gains across open and proprietary VLMs. Given the importance of fast, instance-specific grounding, often without fine-tuning, for users of real-world workflows (such as robotics, augmented reality assistants, and creative tools), the practical, data-centric recipe offered by this work can help strengthen the widespread adoption of vision-language models,” says Saurav Jha, a postdoc at the Mila-Quebec Artificial Intelligence Institute, who was not involved in this work.

Other co-authors include Wei Lin, a researcher at Johannes Kepler University; Eli Schwartz, a research scientist at IBM Research; Hilde Kuehne, a professor of computer science at the Tübingen AI Center and an affiliated professor at the MIT-IBM Watson AI Lab; Raja Gillies, an associate professor at Tel Aviv University; Rogerio Feris, a principal scientist and manager at the MIT-IBM Watson AI Lab; Leonid Karlinsky, a principal scientist at IBM Research; Assaf Arbelle, a senior researcher at IBM Research; and Simon Ullmann, the Sammy and Ruth Cohn Professor of Computer Science at the Weizmann Institute of Science.

This research was partially funded by the MIT-IBM Watson AI Lab.