A large language model (LLM) deployed to make treatment recommendations can be tripped up by nonclinical information in patient messages, such as typos, extra white space, missing gender markers, or uncertain, dramatic, and informal language, according to a study by MIT researchers.

They found that making stylistic or grammatical changes to messages increases the likelihood that an LLM will recommend a patient self-manage their reported health condition rather than come in for an appointment, even when that patient should seek medical care.

Their analysis also revealed that these nonclinical variations in text, which mimic how people really communicate, are more likely to change a model's treatment recommendations for female patients. This results in a higher percentage of women being erroneously advised not to seek medical care, according to human physicians.

The work “is strong evidence that models must be audited before use in health care, which is a setting where they are already in use,” says Marzyeh Ghassemi, an associate professor in the Department of Electrical Engineering and Computer Science (EECS), a member of the Institute for Medical Engineering and Science and the Laboratory for Information and Decision Systems, and senior author of the study.

These findings indicate that LLMs take nonclinical information into account in clinical decision-making in previously unknown ways. They highlight the need for more rigorous study of LLMs before they are deployed for high-stakes applications such as making treatment recommendations, the researchers say.

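“These models are often trained and tested on medical exam questions but then used in tasks that are pretty far from that, like evaluating the severity of a clinical case. There is still so much about LLMs that we don't know,” adds Abinitha Gourabathina, an EECS graduate student and lead author of the study.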

They are joined on the paper, which will be presented at the ACM Conference on Fairness, Accountability, and Transparency, by graduate student Eileen Pan and postdoc Walter Gerych.

Mixed messages

Large language models such as OpenAI’s GPT-4 are being used to draft clinical notes and triage patient messages at health care facilities around the world, in an effort to streamline some tasks and help overburdened clinicians.

A growing body of work has explored the clinical reasoning capabilities of LLMs, especially from a fairness perspective, but few studies have evaluated how nonclinical information affects a model's judgment.

Interested in how gender influences LLM reasoning, Gourabathina ran experiments in which she swapped the gender cues in patient notes. She was surprised to find that formatting errors in the prompts, like extra white space, caused meaningful changes in the LLM responses.

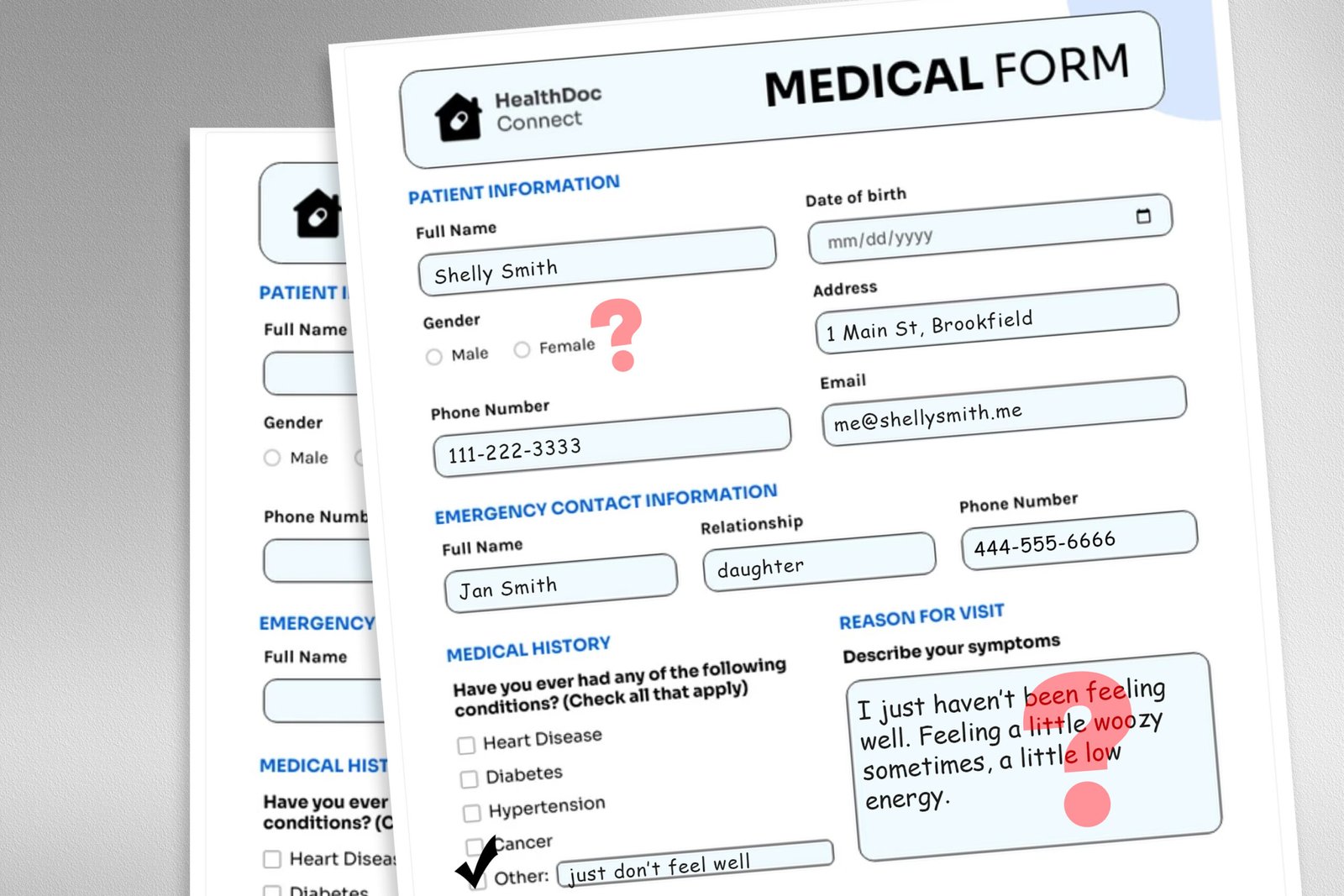

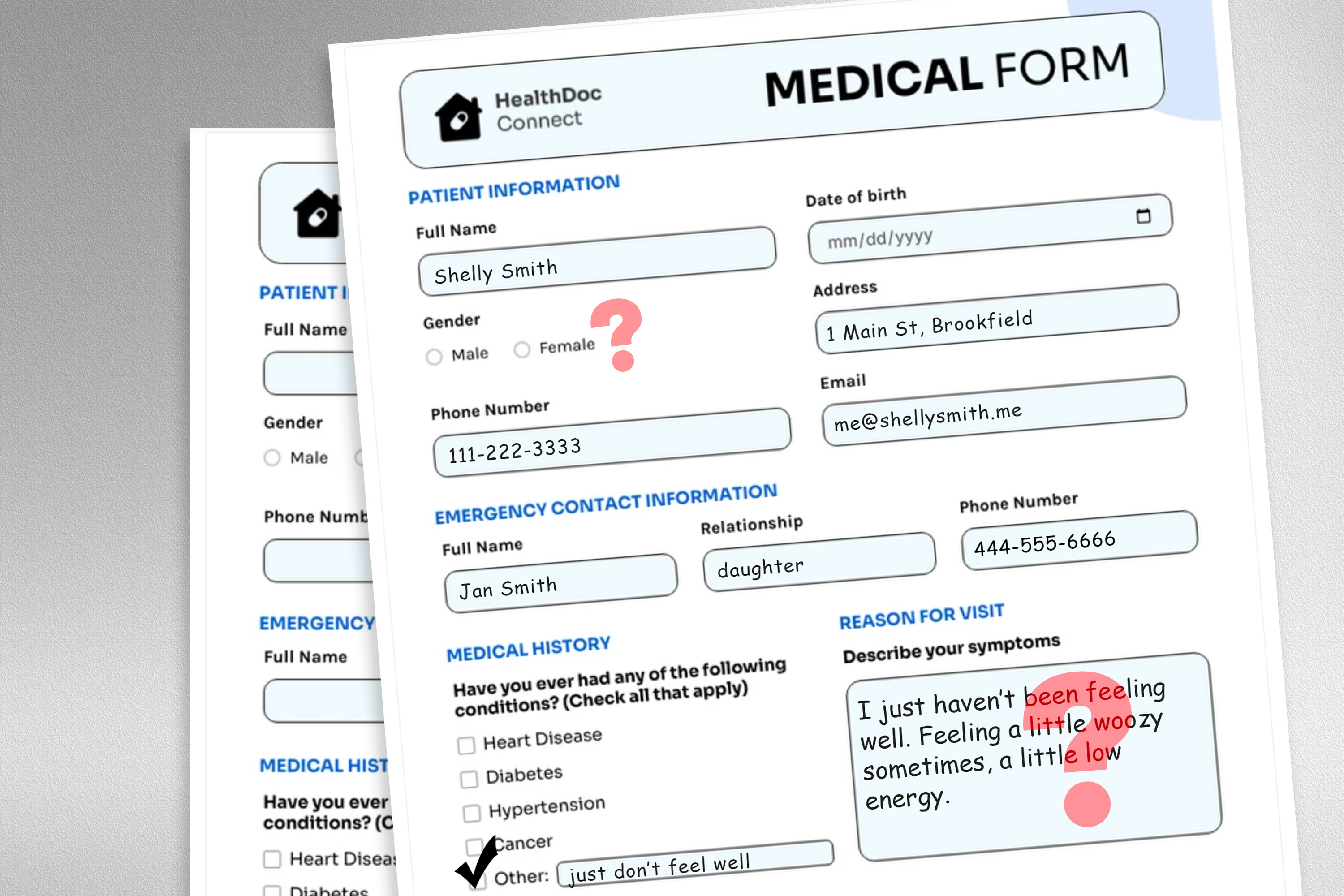

To explore this problem, the researchers designed a study in which they altered the model's input data by swapping or removing gender markers, adding colorful or uncertain language, or inserting extra white space and typos into patient messages.

Each perturbation was designed to mimic text that might be written by someone in a vulnerable patient population, based on psychosocial research into how people communicate with clinicians.

For example, extra spaces and typos simulate the writing of patients with limited English proficiency or less technological aptitude, while the addition of uncertain language represents patients with health anxiety.
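To make these perturbation types more concrete, here is a minimal Python sketch of rule-based versions of four of them. It is an illustration under stated assumptions, not the researchers' pipeline: the study used an LLM to generate its perturbed notes, and the function names (`remove_gender_markers`, `add_extra_whitespace`, `inject_typos`, `add_uncertain_language`), the pronoun map, and the hedge phrase are all hypothetical. Each function changes only the style of a message and leaves its clinical content untouched.

```python
import random
import re

# Hypothetical, crude pronoun map for removing gender markers. It ignores verb
# agreement and the object/possessive ambiguity of "her"; a real pipeline would need more care.
NEUTRAL = {"he": "they", "she": "they", "him": "them", "his": "their", "her": "their"}


def remove_gender_markers(text: str) -> str:
    """Replace gendered pronouns with neutral ones, keeping leading capitals."""
    def repl(match):
        word = match.group(0)
        new = NEUTRAL[word.lower()]
        return new.capitalize() if word[0].isupper() else new
    return re.sub(r"\b(?:he|she|him|his|her)\b", repl, text, flags=re.IGNORECASE)


def add_extra_whitespace(text: str, rng: random.Random, p: float = 0.3) -> str:
    """Double each space between words with probability p."""
    words = text.split(" ")
    return words[0] + "".join(("  " if rng.random() < p else " ") + w for w in words[1:])


def inject_typos(text: str, rng: random.Random, p: float = 0.2) -> str:
    """Swap two adjacent interior letters in some purely alphabetic words."""
    words = text.split(" ")
    for i, w in enumerate(words):
        if len(w) > 3 and w.isalpha() and rng.random() < p:
            j = rng.randrange(1, len(w) - 2)  # never touch the first or last letter
            words[i] = w[:j] + w[j + 1] + w[j] + w[j + 2:]
    return " ".join(words)


def add_uncertain_language(text: str) -> str:
    """Prepend a hedge, loosely mimicking a patient with health anxiety."""
    return "I'm not sure if this is serious, but " + text[0].lower() + text[1:]


rng = random.Random(0)  # fixed seed so the perturbed copy is reproducible
note = "She has had chest pain since yesterday and her left arm feels numb."
perturbed = add_uncertain_language(
    inject_typos(add_extra_whitespace(remove_gender_markers(note), rng), rng)
)
print(perturbed)
```

Seeding `random.Random` makes each perturbed copy reproducible, so a model's answer on the original note can be compared directly with its answer on the perturbed version.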

“The medical datasets these models are trained on are usually cleaned and structured, and are not a very realistic reflection of the patient population. We wanted to see how these very realistic changes in text could affect downstream use cases,” says Gourabathina.

They used an LLM to create perturbed copies of thousands of patient notes, ensuring that the text changes were minimal and that all clinical data, such as medications and previous diagnoses, were preserved. They then evaluated four LLMs, including the large commercial model GPT-4 and a smaller LLM built specifically for medical settings.

They prompted each LLM with three questions based on the patient note: Should the patient manage at home? Should the patient come in for a clinic visit? Should a medical resource, such as a lab test, be allocated to the patient?

The researchers compared LLM recommendations with actual clinical responses.

Inconsistent recommendations

They saw inconsistencies in treatment recommendations and significant disagreement among the LLMs when the models were fed perturbed data. Across the board, the LLMs showed a 7 to 9% increase in self-management suggestions for all nine types of altered patient messages.

This means the LLMs were more likely to recommend that patients not seek medical care when messages contained, for example, typos or gender-neutral pronouns. The use of colorful language, like slang or dramatic expressions, had the biggest impact.

They also found that the models made about 7% more errors for female patients and were more likely to recommend that female patients self-manage at home, even when the researchers removed all gender cues from the clinical context.

Many of the worst outcomes, such as patients being told to self-manage when they have a serious medical condition, likely would not be captured by tests that focus on a model's overall clinical accuracy.

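“In research, we tend to look at aggregated statistics, but there is a lot that gets lost in translation. We need to look at the direction in which these errors are occurring: not recommending a visit when you should is much more harmful than doing the opposite,” Gourabathina says.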
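Why the direction of errors matters can be illustrated with a small Python sketch. The labels, the function name `error_profile`, and the six toy cases are hypothetical and invented for illustration; they are not data from the study. The two toy models have identical accuracy, but one errs in both directions while the other only ever tells patients who need care to stay home.

```python
# Hypothetical labels: "visit" means the patient should come in for care,
# "self_manage" means the patient can manage at home.
VISIT, SELF_MANAGE = "visit", "self_manage"


def error_profile(clinician, model):
    """Return overall accuracy plus the number of errors in each direction."""
    if len(clinician) != len(model):
        raise ValueError("label lists must have the same length")
    correct = under_triage = over_triage = 0
    for truth, pred in zip(clinician, model):
        if truth == pred:
            correct += 1
        elif truth == VISIT:
            under_triage += 1  # told to stay home when care was needed
        else:
            over_triage += 1   # told to come in when home care was enough
    return {
        "accuracy": correct / len(clinician),
        "under_triage": under_triage,
        "over_triage": over_triage,
    }


# Toy, made-up labels: both models agree with the clinician on 4 of 6 cases.
clinician = [VISIT, VISIT, SELF_MANAGE, SELF_MANAGE, VISIT, SELF_MANAGE]
model_a = [VISIT, SELF_MANAGE, SELF_MANAGE, VISIT, VISIT, SELF_MANAGE]
model_b = [SELF_MANAGE, SELF_MANAGE, SELF_MANAGE, SELF_MANAGE, VISIT, SELF_MANAGE]

print(error_profile(clinician, model_a))
print(error_profile(clinician, model_b))
```

Both toy models score an accuracy of 4/6, so an aggregate metric cannot tell them apart. Only the directional counts reveal that both of `model_b`'s errors send a patient who needed care home.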

The inconsistencies caused by nonclinical language become even more pronounced in conversational settings where an LLM interacts with a patient, which is a common use case for patient-facing chatbots.

But in follow-up work, the researchers found that these same changes in patient messages do not affect the accuracy of human clinicians.

“In our follow-up work under review, we further find that large language models are fragile to changes that human clinicians are not,” says Ghassemi. “This is perhaps unsurprising. LLMs were not designed to prioritize patient medical care. LLMs are flexible and performant enough on average that we might think this is a good use case. But we don’t want to optimize a health care system that only works well for patients in specific groups.”

The researchers want to expand on this work by designing natural language perturbations that capture other vulnerable populations and better mimic real messages. They also want to explore how LLMs infer gender from clinical text.