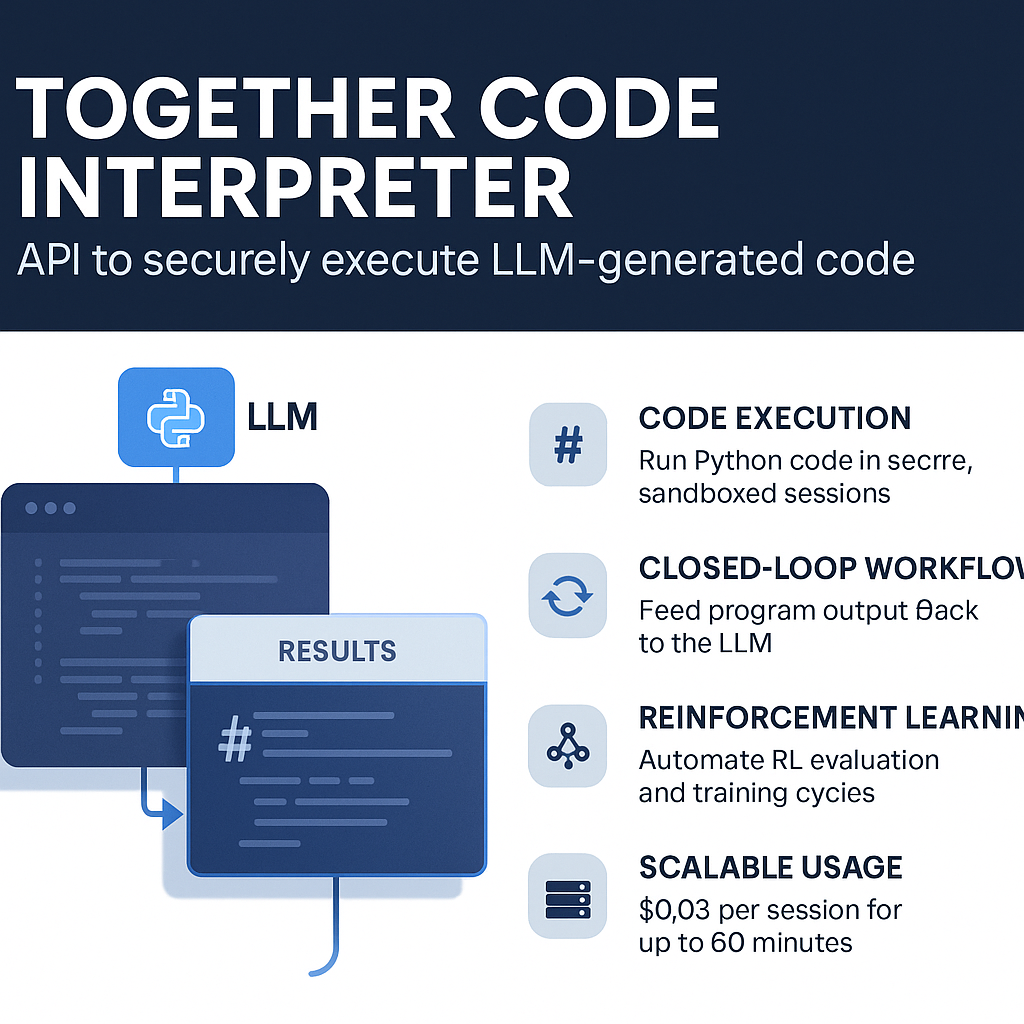

Together.ai debuts Code Interpreter API to execute LLM-generated code securely, enabling smarter agent workflows and faster reinforcement learning operations.

Date: 21 May 2025

Together.ai has unveiled a Code Interpreter API that lets developers execute LLM-generated code safely and at scale.

Launched as the Together Code Interpreter (TCI), the API gives developers and machine learning teams a way to run and validate code written by Large Language Models (LLMs). It addresses a key limitation of AI code generation: models can write code but cannot run it themselves.

🔹 From Code Creation to Execution

While LLMs like Qwen Coder 32B can generate sophisticated code, they typically can’t execute or test it. This disconnect forces developers to validate outputs manually. TCI changes that by executing LLM-generated code in a secure sandbox environment and returning the results, which makes fully automated, closed-loop systems possible.
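The closed loop described above can be sketched in a few lines of Python. This is a minimal illustration, not Together.ai's implementation: `run_locally`, `closed_loop`, and `fake_model` are hypothetical names, the "model" is a hard-coded stand-in, and the executor runs code in-process with `exec`, which is not a sandbox. In a real setup the `execute` callable would send the code to an isolated service such as TCI.

```python
import contextlib
import io
import traceback


def run_locally(code: str) -> dict:
    """Stand-in executor (assumption): runs code in-process and captures stdout.

    This is NOT a sandbox. It only mimics the shape of "send code, get results".
    """
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, {"__name__": "__sketch__"})
        return {"ok": True, "stdout": buffer.getvalue(), "error": ""}
    except Exception:
        return {
            "ok": False,
            "stdout": buffer.getvalue(),
            "error": traceback.format_exc(limit=1),
        }


def closed_loop(generate, execute, task: str, max_rounds: int = 3) -> dict:
    """Generate code, execute it, and feed any error back into the next attempt."""
    feedback = ""
    result = {"ok": False, "stdout": "", "error": "no attempts made"}
    for round_number in range(1, max_rounds + 1):
        code = generate(task, feedback)
        result = execute(code)
        if result["ok"]:
            return {**result, "rounds": round_number}
        feedback = result["error"]  # the execution output drives the retry
    return {**result, "rounds": max_rounds}


def fake_model(task: str, feedback: str) -> str:
    """Hypothetical stand-in for an LLM: the first attempt is buggy, the retry is fixed."""
    if not feedback:
        return "print(total)"  # fails: `total` is never defined
    return "total = sum(range(5))\nprint(total)"


outcome = closed_loop(fake_model, run_locally, "print the sum of 0..4")
```

Here the first attempt fails, the error text is passed back to the generator, and the second attempt succeeds, so no human has to inspect the intermediate output.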

“This brings new depth to agentic workflows and enables continuous learning through real-time feedback,” said a Together.ai spokesperson.

🔹 Ideal for Reinforcement Learning (RL)

TCI has found early adoption in reinforcement learning environments where fast, repeatable testing is critical. Teams at Berkeley AI Research and Sky Computing Lab are already using TCI to run concurrent RL operations, improving model accuracy and reducing training costs.

Each execution runs in an isolated, secure container. The platform supports hundreds of simultaneous jobs, which suits large-scale machine learning evaluations.
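To illustrate why concurrent execution matters for RL, the sketch below scores a batch of candidate programs in parallel and turns each result into a reward. Everything here is an assumption made for illustration: the task (squaring a number), the `TEST_CASES`, and the function names are hypothetical, and `exec` in a thread pool stands in for the isolated containers the article describes.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical task: each candidate must define solve(x) returning x squared.
TEST_CASES = [(0, 0), (2, 4), (5, 25)]  # (input, expected output) pairs


def score_candidate(code: str) -> float:
    """Return the fraction of test cases passed; 0.0 if the code fails to run."""
    namespace = {}
    try:
        exec(code, namespace)  # stand-in for an isolated execution
        solve = namespace["solve"]
        passed = sum(1 for arg, expected in TEST_CASES if solve(arg) == expected)
    except Exception:
        # Syntax errors, a missing solve(), or a crash on any case all score zero.
        return 0.0
    return passed / len(TEST_CASES)


def score_batch(candidates: list[str], max_workers: int = 8) -> list[float]:
    """Score many candidates concurrently, preserving input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(score_candidate, candidates))


candidates = [
    "def solve(x):\n    return x * x",  # correct
    "def solve(x):\n    return x + x",  # right for 0 and 2, wrong for 5
    "def solve(x) return x",            # syntax error
]
rewards = score_batch(candidates)
```

In an RL setting, a list like `rewards` would be fed back to the training loop as the signal for each generated program.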

🔹 Key Features

- Secure Code Execution: Run LLM-generated Python securely in sandboxed sessions

- Closed-Loop Learning: Reuse execution output to refine LLM behavior

- Session-Based Pricing: $0.03 per 60-minute session with multi-job support

- Plug & Play Access: Python SDK + REST API with full documentation

- MCP Integration: Add code interpretation to any MCP-enabled client
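As a rough worked example of the session-based pricing above, the sketch below estimates cost from a session count. The $0.03 figure comes from the list; the assumptions that the fee is flat per session regardless of how many jobs run inside it, and that each started 60-minute window counts as a new session, are ours. The function names are hypothetical.

```python
from decimal import Decimal

PRICE_PER_SESSION = Decimal("0.03")  # from the article: $0.03 per 60-minute session
SESSION_MINUTES = 60


def sessions_needed(total_minutes: int) -> int:
    """Ceiling division: assumes every started 60-minute window is a new session."""
    if total_minutes < 0:
        raise ValueError("total_minutes must be non-negative")
    return -(-total_minutes // SESSION_MINUTES)


def estimate_cost(num_sessions: int) -> Decimal:
    """Assumes a flat fee per session, independent of jobs run within it."""
    if num_sessions < 0:
        raise ValueError("num_sessions must be non-negative")
    return PRICE_PER_SESSION * num_sessions


cost_for_1000_sessions = estimate_cost(1000)            # 1,000 sessions
cost_for_150_minutes = estimate_cost(sessions_needed(150))  # 150 minutes of runtime
```

Under these assumptions, 1,000 sessions come to $30, and 150 minutes of continuous runtime spans three sessions, or $0.09.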

🔹 How to Start Using TCI

Developers can begin using TCI via Together.ai’s Python SDK or by calling the REST API directly. Full documentation is available, and the tool can be added to any client that supports the Model Context Protocol (MCP). Sessions are priced at $0.03 per 60 minutes and can run multiple jobs.

Together Code Interpreter (TCI) is more than an execution engine: it links code generation to deployment, letting LLMs close the loop between writing code and running it.