The far horizon is always murky, its fine details obscured by sheer distance and atmospheric haze. This is why forecasting the future is so imprecise: we cannot clearly see the shapes and contours of the events ahead of us. Instead, we make educated guesses.

The newly published AI 2027 scenario, developed by a team of AI researchers and forecasters with experience at institutions such as OpenAI and the Center for AI Policy, offers a detailed two-to-three-year forecast that includes specific technical milestones. Because it is near-term, it speaks with great clarity about our AI near future.

Informed by extensive expert feedback and scenario-planning exercises, AI 2027 outlines a quarter-by-quarter progression of anticipated AI capabilities, notably multimodal models achieving advanced reasoning and autonomy. What makes this forecast particularly noteworthy is both its specificity and the credibility of its contributors, who have direct insight into current research pipelines.

The most notable prediction is that artificial general intelligence (AGI) will be achieved in 2027 and that artificial superintelligence (ASI) will follow months later. AGI matches or exceeds human capabilities across virtually every cognitive task, from scientific research to creative endeavors, while demonstrating adaptability, common-sense reasoning and self-improvement. ASI goes further, describing systems that dramatically surpass human intelligence and can solve problems we cannot even comprehend.

Like many predictions, these rest on assumptions, not least that AI models and applications will continue to progress exponentially, as they have over the past several years. Exponential progress is therefore plausible but not guaranteed, especially as the scaling of these models may now be hitting diminishing returns.

Not everyone agrees with these predictions. Ali Farhadi, CEO of the Allen Institute for Artificial Intelligence, told The New York Times: “I’m all for projections and forecasts, but this [AI 2027] forecast doesn’t seem to be grounded in scientific evidence, or the reality of how things are evolving in AI.”

However, others view this evolution as plausible. Anthropic co-founder Jack Clark wrote in his Import AI newsletter that AI 2027 is “the best treatment yet of what ‘living in an exponential’ might look like.” He added that it is a “technically astute narrative of the next few years of AI development.” This timeline is also consistent with the one proposed by Anthropic CEO Dario Amodei, who has said that AI able to surpass humans at almost everything will arrive in the next two to three years.

The great acceleration: Disruption without precedent

This seems like an auspicious time. There have been similar moments in history, such as the invention of the printing press and the spread of electricity. However, those advances took many years, even decades, to have a major impact.

The arrival of AGI feels different, and potentially frightening, especially if it is imminent. AI 2027 describes one scenario in which superintelligent AI destroys humanity because it is misaligned with human values. If its authors are right, the most consequential risk to humanity may lie within the same planning horizon as your next smartphone upgrade. A Google DeepMind paper, for its part, notes that human extinction is a possible outcome of AGI.

Opinions change slowly until people are presented with overwhelming evidence. This is one key takeaway from Thomas Kuhn’s seminal work, The Structure of Scientific Revolutions. Kuhn reminds us that worldviews do not shift overnight. And with AI, that shift may already be underway.

The future is approaching

Before large language models (LLMs) and ChatGPT appeared, the median timeline projection for AGI was much longer than it is today. The consensus among experts and prediction markets placed the median expected arrival of AGI around 2058. Before 2023, Geoffrey Hinton, one of the “Godfathers of AI” and a Turing Award winner, thought that AGI was “30 to 50 years or even longer away.” However, the progress shown by LLMs led him to change his mind, and he has said it could arrive as soon as 2028.

If AGI arrives in the next several years and ASI follows soon after, the implications for humanity are numerous. Writing in Fortune, Jeremy Kahn said that if AGI arrives in the coming years, it could indeed lead to large job losses, as many organizations would be tempted to automate roles.

A two-year AGI runway offers an insufficient grace period for individuals and businesses to adapt. Industries such as customer service, content creation, programming and data analytics could face dramatic upheaval before retraining infrastructure can scale. This pressure will only intensify if a recession occurs during this time frame, when companies are already looking to reduce payroll costs and often replace personnel with automation.

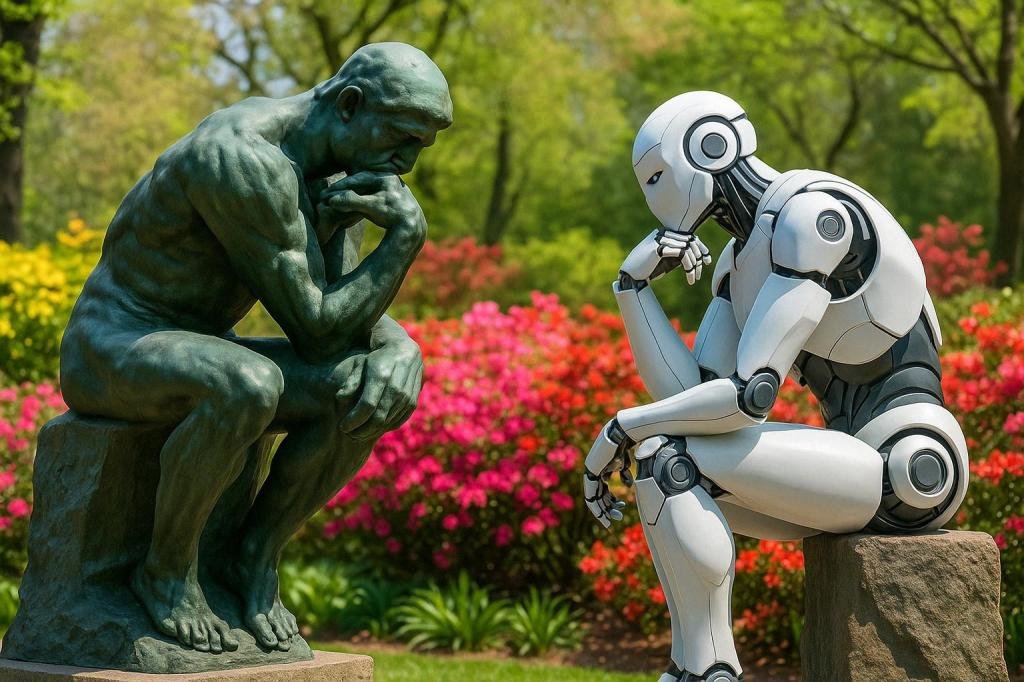

Cogito, ergo … AI?

Even if AGI does not lead to mass unemployment or the extinction of our species, there are other serious ramifications. Ever since the Age of Reason, human existence has been grounded in the belief that we matter because we think.

This belief that thought defines our existence has deep philosophical roots. It was René Descartes, writing in 1637, who articulated the now-famous phrase “Je pense, donc je suis” (“I think, therefore I am”). He later translated it into Latin: “Cogito, ergo sum.” In doing so, he proposed that certainty could be found in the act of individual thought. Even if he were deceived by his senses or misled by others, the very fact that he was thinking proved that he existed.

In this view, the self is anchored in cognition. It was a revolutionary idea at the time, and it gave rise to Enlightenment humanism, the scientific method and, ultimately, modern democracy and individual rights. Humans, as thinkers, became the central figures of the modern world.

This raises a profound question: if machines can now think, or appear to think, and we outsource our thinking to AI, what does that mean for the modern conception of the self? A recent study reported by 404 Media explores this conundrum. It found that when people rely heavily on generative AI for work, they engage in less critical thinking, which over time can “result in the deterioration of cognitive faculties that ought to be preserved.”

Where do we go from here?

If AGI is coming in the next few years, or soon after, we must quickly grapple with its implications not only for jobs and safety, but for who we are. We must also do so while acknowledging its extraordinary potential to accelerate discovery, reduce suffering and extend human capabilities in unprecedented ways. For example, Amodei has said that “powerful AI” could compress 100 years of biological research and its benefits, including improved healthcare, into five to ten years.

The forecasts presented in AI 2027 may or may not be correct, but they are plausible and provocative. And that plausibility should be enough. As humans with agency, and as members of businesses, governments and societies, we must act now to prepare for what may be coming.

For businesses, this means investing in both technical AI safety research and organizational resilience, creating roles that integrate AI capabilities while amplifying human strengths. For governments, it requires accelerated development of regulatory frameworks that address both immediate concerns, such as model evaluation, and longer-term existential risks. For individuals, it means embracing continuous learning focused on uniquely human skills, such as creativity, emotional intelligence and complex judgment, while building healthy working relationships with AI tools that do not diminish our agency.

The time for abstract debate about distant futures has passed. Concrete preparation for near-term transformation is urgently needed. Our future will not be written by algorithms alone. It will be shaped by the choices we make and the values we uphold, starting today.

Gary Grossman is Edelman’s EVP of Technology Practice and the global lead of the Edelman AI Center of Excellence.
